Natural Language Processing (NLP) is an exciting field that empowers computers to process and generate human language. One of the foundational tools in NLP is the n-gram language model. Whether you’re working on text prediction, machine translation, or chatbot development, understanding n-gram models is essential. In this guide, we will explore the concept of n-gram models with real-world examples, practical applications, and insights into their strengths and limitations. This post uses the keyword “n gram language model example” to help you understand the topic in depth.

What Is an N-Gram Language Model?

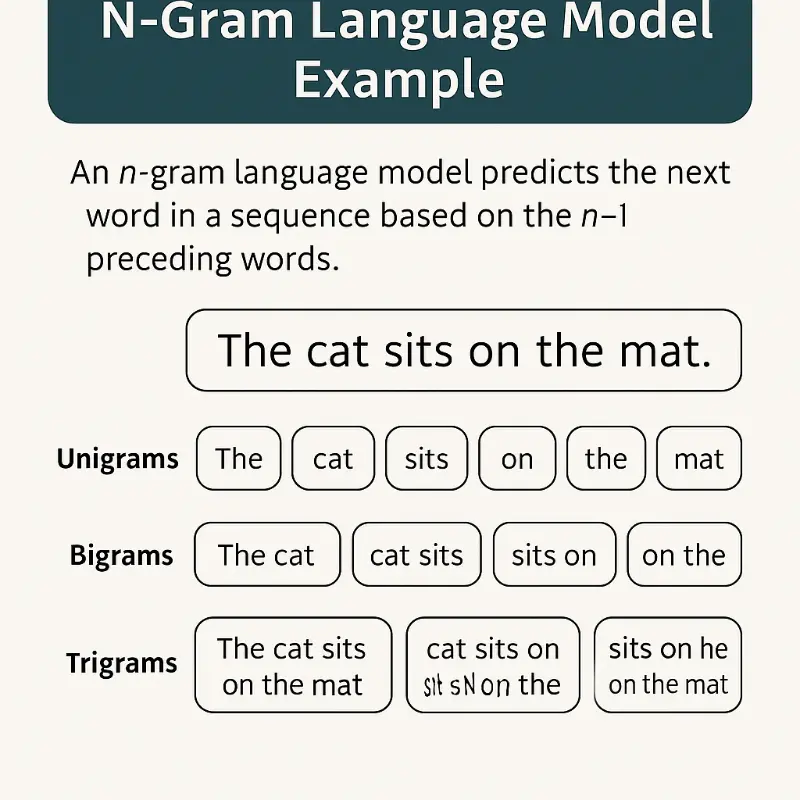

An n-gram language model is a statistical model used to predict the next word in a sequence based on the previous “n – 1” words. The model relies on a large corpus of text to calculate the likelihood of word sequences occurring together.

The “n” in n-gram stands for the number of words in the group:

- Unigram: a single word

- Bigram: a sequence of two words

- Trigram: a sequence of three words

- 4-gram and beyond: longer sequences of words

For instance, in the sentence “The dog barked loudly,” the bigrams are:

- “The dog”

- “dog barked”

- “barked loudly”

The core idea behind these models is that the probability of a word depends on its context, i.e., the words that precede it.

Why N-Gram Models Matter in NLP

N-gram models are used across various NLP applications because of their simplicity, efficiency, and interpretability. Here are a few reasons why they remain relevant:

- They form a strong statistical baseline for language modeling tasks.

- They can be trained quickly compared to neural network models.

- They are interpretable, making them useful for understanding language trends.

Despite the rise of deep learning models, n-gram models still hold importance in systems that require speed and transparency or operate with limited data and computing power.

N-Gram Language Model Example

Let’s walk through an easy-to-follow n gram language model example using a basic sentence and delve deeper into the steps involved in building and understanding a simple n-gram model.

We’ll use the sentence: “I enjoy learning about natural language processing.”

Step 1: Tokenization

The first step in building an n-gram model is tokenization, which involves splitting the sentence into individual words or tokens. This step ensures that each word is treated as a separate unit that can be counted and analyzed.

Tokens: [“I”, “enjoy”, “learning”, “about”, “natural”, “language”, “processing”]

Tokenization is essential in preprocessing because it standardizes input text for the model. Advanced tokenization may also include removing punctuation, converting to lowercase, or handling contractions.

Step 2: Generate Bigrams (2-grams)

Next, we create bigrams, which are pairs of adjacent words in the tokenized sentence. This step builds the basic structure of the bigram language model.

Bigrams:

- (“I”, “enjoy”)

- (“enjoy”, “learning”)

- (“learning”, “about”)

- (“about”, “natural”)

- (“natural”, “language”)

- (“language”, “processing”)

Bigrams help capture the relationship between consecutive words, which is critical for understanding context in language modeling.

Step 3: Count Unigrams and Bigrams

We now count the frequency of each unigram (single word) and bigram (two-word sequence).

Unigram Counts:

- “I”: 1

- “enjoy”: 1

- “learning”: 1

- “about”: 1

- “natural”: 1

- “language”: 1

- “processing”: 1

Bigram Counts:

- (“I enjoy”): 1

- (“enjoy learning”): 1

- (“learning about”): 1

- (“about natural”): 1

- (“natural language”): 1

- (“language processing”): 1

Although in this case each pair occurs only once, in a larger corpus some combinations will appear more frequently than others, helping the model learn common usage patterns.

Step 4: Calculate Conditional Probabilities

The crux of an n-gram model lies in calculating the conditional probability of a word given its context. For bigrams, this means estimating the likelihood of the second word occurring given the first.

P(“enjoy” | “I”) = Count(“I enjoy”) / Count(“I”) = 1 / 1 = 1.0

This same logic applies to the other bigrams. While this small dataset gives every bigram a probability of 1.0, real-world data introduces variability.

These probabilities help in predicting the next word based on prior context. For example, in predictive text, if a user types “natural,” the model might predict “language” based on its high probability of following “natural.”

Step 5: Generalization with a Larger Corpus

When working with a larger corpus, you’ll see a richer distribution of word pairs. This allows for more accurate and realistic modeling. For example, “machine learning” and “artificial intelligence” might appear many times, signaling strong word associations.

With larger data, you also encounter the issue of unseen n-grams. That’s where smoothing techniques are necessary to handle zero probabilities and ensure generalization.

The Data Sparsity Problem

One of the key limitations of n-gram models is data sparsity. Even in large corpora, there are many valid word sequences that simply don’t occur. When a model encounters an unseen n-gram, it assigns it a probability of zero, which can lead to significant performance issues.

This is where smoothing techniques come into play. These methods assign small probabilities to unseen n-grams to ensure the model remains robust.

Common Smoothing Methods

- Laplace (Add-One) Smoothing: Adds one to all counts to prevent zero probabilities.

- Add-k Smoothing: Similar to Laplace, but uses a smaller constant value.

- Good-Turing Smoothing: Redistributes probability mass from frequent to rare or unseen events.

- Kneser-Ney Smoothing: A more sophisticated method that considers the number of different contexts a word appears in.

Practical Applications of N-Gram Models

N-gram language models have been applied in various NLP tasks with real-world implications. Here are a few key use cases:

- Text Prediction: Smartphones use n-gram models to predict the next word while you’re typing. This helps improve typing speed and accuracy.

- Machine Translation: N-gram models assist in choosing the most likely word combinations when translating sentences from one language to another.

- Speech Recognition: When multiple words sound similar, the model uses n-gram probabilities to choose the most plausible sequence.

- Spell Checking and Grammar Correction: Language tools detect improbable sequences and suggest better alternatives.

- Chatbot Response Generation: Bots use n-gram models to predict suitable responses based on recent user inputs.

Evaluating N-Gram Language Models

The performance of a language model is often evaluated using a metric called perplexity. This measures how well the model predicts a sample. A lower perplexity indicates better predictive accuracy.

It’s important to test the model on unseen data to ensure it generalizes well and doesn’t just memorize training sequences.

Advantages of N-Gram Models

- Simplicity: Easy to implement and understand.

- Speed: Fast training and prediction.

- Interpretability: Transparent logic makes it easier to diagnose issues.

Limitations of N-Gram Models

- Data Sparsity: Many word sequences may not appear in training data.

- Fixed Context Window: Can’t model long-distance dependencies.

- Vocabulary Explosion: Higher n-values significantly increase model size.

N-Grams vs Neural Language Models

While n-gram models are fast and easy to use, they have largely been replaced by neural models in large-scale NLP applications. Deep learning models like RNNs, LSTMs, and Transformers can capture long-term dependencies and learn richer word representations.

That said, n-gram models still play an important role in situations where resources are limited or model transparency is critical.

Best Practices When Using N-Gram Models

- Start with bigrams or trigrams for simple tasks.

- Apply smoothing to prevent zero probabilities.

- Use a large and clean corpus for training.

- Evaluate using perplexity to gauge performance.

- Consider backoff or interpolation to combine different n-gram orders.

Conclusion

The n gram language model example we explored highlights how these models function and why they remain an essential tool in NLP. Despite the rise of complex neural networks, n-gram models offer a strong foundation for learning and prototyping. They are interpretable, efficient, and surprisingly powerful when paired with proper techniques like smoothing.

Whether you’re a student, data scientist, or NLP practitioner, mastering n-gram models will equip you with valuable insights and tools for a range of language processing tasks.