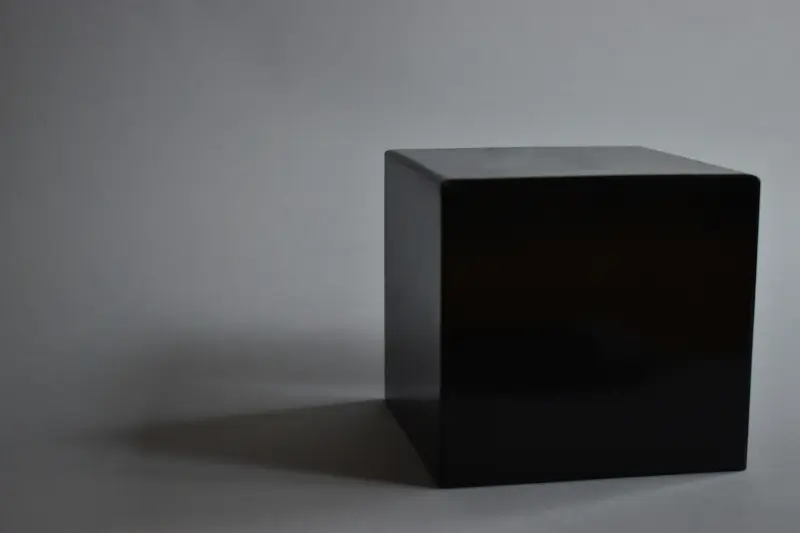

In the rapidly evolving landscape of artificial intelligence, we face a fundamental paradox. The most powerful AI models, such as deep neural networks, ensemble methods, and other complex machine learning algorithms, often operate as “black boxes,” delivering impressive results while concealing their decision-making processes. This opacity creates a critical challenge: how can we trust and responsibly deploy AI systems when we cannot understand how they reach their conclusions?

Explainable AI (XAI) techniques for black box models have emerged as the crucial bridge between AI performance and human understanding. These methods don’t require rebuilding existing models from scratch but instead provide post-hoc explanations that illuminate the reasoning behind AI decisions. For organizations already invested in complex AI systems, these techniques offer a practical path to transparency without sacrificing the performance advantages that made black box models attractive in the first place.

Model-Agnostic Explanation Techniques

Model-agnostic explainable AI techniques represent the most versatile approach to understanding black box models. These methods work regardless of the underlying algorithm, treating the AI model as a function that maps inputs to outputs without needing to peer inside its architecture.

LIME: Local Interpretable Model-Agnostic Explanations

LIME stands as one of the most influential explainable AI techniques for black box models. The core insight behind LIME is elegantly simple: while a complex model’s global behavior might be incomprehensible, its local behavior around any specific prediction can be approximated by a simple, interpretable model.

The LIME process works by creating artificial data points in the neighborhood of the instance being explained, observing how the black box model responds to these variations, and then fitting a simple linear model to approximate the local behavior. Each artificial point is weighted by its proximity to the original instance. For text classification, LIME removes random subsets of words and observes how predictions change. For image classification, it segments the image into superpixels and hides random subsets of them to estimate which regions drive the decision.
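The four steps above can be sketched for numeric tabular data in a few lines of NumPy. This is a minimal illustration, not the `lime` package: the Gaussian perturbations, the exponential proximity kernel, and the hypothetical function name `lime_tabular` are all assumptions chosen for clarity.

```python
import numpy as np

def lime_tabular(predict_fn, x, n_samples=1000, scale=1.0, kernel_width=None, seed=0):
    """LIME-style local linear explanation for one numeric tabular instance.

    Assumptions: Gaussian perturbations with standard deviation `scale` and an
    exponential proximity kernel; the real `lime` package samples differently.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(x.size)
    # 1. Create artificial points in the neighborhood of x.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    # 2. Record how the black box responds to each variation.
    y = np.asarray(predict_fn(Z), dtype=float)
    # 3. Weight points by proximity to x (closer points matter more).
    dist = np.linalg.norm((Z - x) / scale, axis=1)
    w = np.exp(-dist ** 2 / kernel_width ** 2)
    # 4. Fit a weighted linear model: intercept plus one slope per feature.
    A = np.column_stack([np.ones(n_samples), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[1:]  # local feature weights; coef[0] is the intercept
```

For a model that is already linear, the sketch recovers its coefficients. For a nonlinear model with a small `scale`, the weights approximate the local slope around `x`.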

What makes LIME particularly powerful is its flexibility across data types. Whether working with tabular data, text, or images, LIME can generate explanations by identifying the most influential features for any specific prediction. This local focus means that LIME explanations are highly relevant to the specific instance being examined, providing actionable insights for individual cases.

SHAP: Unified Framework for Feature Attribution

SHAP (SHapley Additive exPlanations) has become one of the most widely used explainable AI methods because it gives feature attribution a mathematically rigorous foundation. It builds on Shapley values from cooperative game theory. Shapley values are the unique attribution scheme that satisfies four properties: efficiency, symmetry, dummy (null player), and additivity.

The mathematical elegance of SHAP lies in its game-theoretic foundation. Each feature is treated as a player in a cooperative game, and SHAP values represent the fair contribution of each player to the final outcome. This approach ensures that SHAP values always sum to the difference between the model’s prediction and the expected model output, providing a complete attribution of the model’s decision.
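The game-theoretic definition and the efficiency property can be checked with a brute-force computation on a tiny model. The sketch below enumerates every coalition, so it is only feasible for a handful of features. The value function is an assumption: features in the coalition take the explained instance's values, the others take values from background rows, and predictions are averaged. The function name `exact_shap` is hypothetical.

```python
import itertools
import math
import numpy as np

def exact_shap(predict_fn, x, background):
    """Exact Shapley values for one instance by enumerating every coalition.

    Assumed value function v(S): features in S take x's values, the others
    take each background row's values, and the predictions are averaged.
    Cost is exponential in the number of features M (2**M coalitions).
    """
    x = np.asarray(x, dtype=float)
    background = np.atleast_2d(np.asarray(background, dtype=float))
    M = x.size

    def value(S):
        Z = background.copy()
        idx = list(S)
        if idx:
            Z[:, idx] = x[idx]  # coalition members are "present"
        return float(np.mean(predict_fn(Z)))

    phi = np.zeros(M)
    for i in range(M):
        others = [j for j in range(M) if j != i]
        for size in range(M):
            # Shapley weight |S|! (M - |S| - 1)! / M! for coalitions of this size
            weight = math.factorial(size) * math.factorial(M - size - 1) / math.factorial(M)
            for S in itertools.combinations(others, size):
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi
```

For `f(z) = z0 * z1 + 2 * z2` explained at `[1, 1, 1]` against an all-zero background, the interaction credit is split equally, giving `[0.5, 0.5, 2.0]`. These values sum to `f(x) - f(background) = 3`.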

SHAP offers several computational approaches tailored to different scenarios. TreeSHAP computes exact SHAP values efficiently (in polynomial time) for tree-based models. KernelSHAP offers model-agnostic, sampling-based approximations. DeepSHAP builds on the DeepLIFT backpropagation method to approximate SHAP values for neural networks at far lower cost than model-agnostic sampling. Together, these variants let SHAP be applied to most common families of black box models.

The practical value of SHAP extends beyond individual predictions to global model understanding. By aggregating SHAP values across multiple instances, practitioners can identify the most important features across the entire dataset, understand feature interactions, and detect potential biases or unexpected behaviors in their models.
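A common way to turn local attributions into a global ranking is to average their absolute values per feature. The sketch below assumes the attributions have already been computed by any method and are stored as an `(n_instances, n_features)` array. The function name is hypothetical.

```python
import numpy as np

def global_importance(shap_matrix, feature_names):
    """Rank features by mean absolute local attribution across instances.

    `shap_matrix` is assumed to be an (n_instances, n_features) array of
    per-instance attributions, e.g. SHAP values for a sample of the dataset.
    """
    scores = np.abs(np.asarray(shap_matrix, dtype=float)).mean(axis=0)
    order = np.argsort(scores)[::-1]  # most important first
    return [(feature_names[k], float(scores[k])) for k in order]
```

Taking absolute values keeps positive and negative contributions from cancelling. The sign is still worth inspecting separately to see the direction of each feature's effect.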

Permutation Importance: Understanding Feature Relevance

Permutation importance offers a straightforward yet powerful approach to understanding which features matter most to a black box model. This technique measures the decrease in model performance when each feature’s values are randomly shuffled, effectively breaking the relationship between that feature and the target variable.

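The appeal of permutation importance lies in its simplicity and interpretability. If shuffling a feature causes a significant drop in model performance, the model relies heavily on that feature for its predictions. Conversely, if shuffling has little effect, the feature contributes little to the model’s decision-making. One caveat applies to strongly correlated features. The model can partly recover a shuffled feature’s information from its correlated partners, so each of them may look less important than the group really is.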
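The procedure can be sketched directly: score the model once, then shuffle one column at a time and record the drop. This is a minimal illustration under the assumption that `metric` is higher-is-better (such as accuracy or negative mean squared error). scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`, but the function below is a standalone sketch, not that API.

```python
import numpy as np

def permutation_importance(predict_fn, X, y, metric, n_repeats=5, seed=0):
    """Average drop in a higher-is-better score when each column is shuffled."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    baseline = metric(y, predict_fn(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])  # break feature j's link to y
            drops.append(baseline - metric(y, predict_fn(Xp)))
        importances[j] = np.mean(drops)
    return importances
```

A feature the model ignores scores exactly zero, because shuffling it leaves the predictions unchanged. Ideally the score is computed on held-out data, so importance reflects generalization rather than memorization.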

This technique is particularly valuable for feature selection and model debugging. Features with low permutation importance are candidates for removal, which can simplify a model with little loss in performance; retraining and re-evaluating the model confirms this. Additionally, unexpectedly high importance for seemingly irrelevant features can reveal data leakage or other modeling issues that might otherwise go undetected.

Gradient-Based Explanation Methods

For neural networks and other differentiable models, gradient-based explanation methods leverage the mathematical structure of these models to provide insights into their decision-making processes. These techniques use the model’s gradients to understand how changes in input features would affect the output.

Gradient Attribution and Integrated Gradients

Simple gradient attribution computes the partial derivative of the output with respect to each input feature, indicating how sensitive the prediction is to small changes in that feature. However, gradients can be noisy and may not reflect the true importance of features, particularly in deep networks with many layers and non-linear activations. For example, when an activation saturates, the local gradient can be close to zero even for a feature that strongly drives the output.
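The saturation problem is easy to reproduce. The sketch below estimates gradients with central finite differences, standing in for what an autodiff framework would return. It applies them to a hypothetical model in which `x[0]` contributes almost 1.0 to the output, yet sits in the flat region of `tanh`.

```python
import numpy as np

def gradient_attribution(f, x, h=1e-5):
    """Central finite-difference estimate of dF/dx_i for a scalar model f.

    A stand-in for the exact gradient an autodiff framework would return.
    """
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        grad[i] = (f(x + step) - f(x - step)) / (2 * h)
    return grad

def saturating_model(x):
    # Hypothetical model: x[0] drives the output, but tanh is flat near x[0] = 2.
    return np.tanh(5 * x[0]) + 0.1 * x[1]

print(gradient_attribution(saturating_model, [2.0, 2.0]))
```

The gradient for `x[0]` is essentially zero and the gradient for `x[1]` is about 0.1. Plain gradients would therefore rank the minor feature above the dominant one.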

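Integrated Gradients addresses these limitations by accumulating gradients along a straight-line path from a baseline input to the actual input. For each feature, it averages the gradient over the path and multiplies the result by the difference between the input value and the baseline value. This approach satisfies two important axioms:

- Sensitivity: if the input and baseline differ in a single feature and produce different predictions, that feature receives a non-zero attribution.
- Implementation invariance: functionally equivalent models receive identical attributions.

It also satisfies completeness: the attributions sum to the difference between the model’s output at the input and its output at the baseline.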
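Integrated Gradients can be approximated with a simple Riemann sum over the path. The sketch uses the midpoint rule and assumes a `grad_fn` that returns the model's gradient for a single input vector. In practice, an autodiff framework would supply that gradient; here a closed-form gradient keeps the example self-contained.

```python
import numpy as np

def integrated_gradients(grad_fn, x, baseline, steps=200):
    """Midpoint Riemann-sum approximation of Integrated Gradients.

    IG_i(x) = (x_i - x'_i) * integral_0^1 dF(x' + a (x - x')) / dx_i da,
    where x' is the baseline.
    """
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    alphas = (np.arange(steps) + 0.5) / steps  # midpoints of [0, 1]
    grads = np.array([grad_fn(baseline + a * (x - baseline)) for a in alphas])
    return (x - baseline) * grads.mean(axis=0)

# Example: F(z) = z0 * z1 + z2**2, whose gradient is known in closed form.
def grad_F(z):
    return np.array([z[1], z[0], 2 * z[2]])

ig = integrated_gradients(grad_F, x=[2.0, 3.0, 1.0], baseline=[0.0, 0.0, 0.0])
print(ig, ig.sum())  # attributions sum to F(x) - F(baseline) = 7
```

The plain gradient at this input is `[3, 2, 2]`, which does not sum to the output change. The integrated attributions `[3, 3, 1]` do sum to it, which illustrates completeness.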

The baseline selection in Integrated Gradients significantly impacts the quality of explanations. Common baselines include zero vectors, average feature values, or domain-specific neutral inputs. The choice of baseline should reflect what constitutes a “neutral” or “uninformative” input in the specific problem domain.

GradCAM for Visual Models

For computer vision models, Gradient-weighted Class Activation Mapping (GradCAM) has become an essential tool for understanding which regions of an image drive model predictions. GradCAM combines the spatial information retained in convolutional layers with gradient information to produce localization maps highlighting the important regions for any target class.

The technique works in three steps:

1. Compute the gradients of the target class score with respect to the feature maps of the final convolutional layer.
2. Average those gradients over each map’s spatial positions to obtain one weight per feature map.
3. Combine the feature maps as a weighted sum, followed by a ReLU that keeps only regions with a positive influence on the class.

This process preserves spatial information while incorporating the model’s learned representations. The result is a coarse heat map, upsampled to the input size for display, that indicates where the model is “looking” when making its decision.
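The weighting step can be sketched in NumPy once the feature maps and their gradients are available. This sketch assumes both have already been extracted as `(K, H, W)` arrays, for example with framework hooks. It omits the final upsampling to image resolution.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Combine conv feature maps into a Grad-CAM heat map.

    activations: (K, H, W) feature maps A^k from the chosen conv layer.
    gradients:   (K, H, W) gradients of the target class score w.r.t. A^k.
    """
    A = np.asarray(activations, dtype=float)
    G = np.asarray(gradients, dtype=float)
    weights = G.mean(axis=(1, 2))           # alpha_k: spatially averaged gradients
    cam = np.tensordot(weights, A, axes=1)  # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)              # ReLU keeps positive evidence only
    if cam.max() > 0:
        cam = cam / cam.max()               # normalize to [0, 1] for display
    return cam
```

Feature maps whose gradients are negative on average push the map down. After the ReLU, only regions that raise the class score remain visible.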

GradCAM’s usefulness extends beyond simple classification tasks. It has been applied to image captioning and visual question answering models, and it can be adapted to other CNN-based architectures. The resulting visualizations often reveal whether models are focusing on semantically meaningful regions or exploiting spurious correlations in the training data.

Surrogate Models and Local Approximations

Surrogate modeling represents another powerful category of explainable AI techniques for black box models. These methods create interpretable approximations of complex models, either globally or locally, to provide insights into the original model’s behavior.

Global Surrogate Models

Global surrogate models attempt to approximate the entire behavior of a black box model using an interpretable algorithm such as linear regression, decision trees, or rule-based systems. The process involves using the black box model to generate predictions on a large dataset, then training the interpretable surrogate model to mimic these predictions.

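The effectiveness of global surrogate models depends heavily on how well the simpler model can approximate the complex model’s behavior. This fidelity is measured against the black box’s predictions rather than the true labels, for example with R² for regression or the agreement rate for classification. High fidelity indicates that the simpler model captures the essential decision-making patterns, making its interpretable structure a reasonable representation of the black box’s logic. Low fidelity means conclusions drawn from the surrogate may not carry over to the original model.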
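A linear-regression surrogate and its fidelity can be computed with ordinary least squares. The sketch below trains on the black box's own outputs and reports R² against those outputs. The choice of a linear surrogate and R² as the fidelity measure are illustrative assumptions; the function name is hypothetical.

```python
import numpy as np

def fit_linear_surrogate(black_box, X):
    """Fit a linear surrogate to a black box's predictions and report fidelity.

    Returns (coefficients with intercept first, R^2 versus black-box outputs).
    """
    X = np.asarray(X, dtype=float)
    y_bb = black_box(X)  # targets come from the model, not from ground truth
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y_bb, rcond=None)
    y_sur = A @ coef
    ss_res = np.sum((y_bb - y_sur) ** 2)
    ss_tot = np.sum((y_bb - y_bb.mean()) ** 2)
    return coef, 1.0 - ss_res / ss_tot
```

A black box that is close to linear yields fidelity near 1. A strongly nonlinear one, such as `x0 ** 2` on data centred at zero, yields fidelity near 0. In the second case the surrogate's coefficients say little about the original model.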

Decision trees serve as particularly effective global surrogates because they naturally segment the input space into interpretable regions. Each path from root to leaf represents a rule that can be easily understood and communicated to stakeholders. The tree structure also reveals the hierarchical importance of features and the interactions between them.

Anchors: High-Precision Rules

Anchors represent a sophisticated evolution of local explanation techniques. They generate if-then rules that act as sufficient conditions for a prediction with high probability. Unlike LIME’s linear approximations, anchors are explicit logical statements describing the region around an instance in which the model’s prediction stays the same.

An anchor rule specifies a set of feature conditions that, when satisfied, keep the prediction the same with high probability, largely regardless of the values of other features. This probability is the rule’s precision. For example, an anchor might state “If age > 65 AND smoking = true, then the model predicts high risk with 95% precision.” Each anchor also has a coverage: the fraction of instances to which the rule applies. This rule-based format proves particularly valuable in high-stakes domains where precise understanding of decision boundaries is crucial.
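The precision and coverage of a candidate rule can be estimated empirically. In the sketch below, a rule is a hypothetical `{feature_index: predicate}` dictionary. As a simplifying assumption, the conditional distribution is approximated by the rows of a reference dataset that satisfy the rule; the original Anchors method draws perturbation samples instead. The sketch covers only this evaluation step, not the rule search.

```python
import numpy as np

def anchor_precision_coverage(predict_fn, x, rule, X_data):
    """Estimate (precision, coverage) of an anchor rule for instance x.

    rule: hypothetical dict {feature_index: predicate}, where each predicate
    maps a column of values to a boolean array.
    """
    X_data = np.asarray(X_data, dtype=float)
    target = predict_fn(np.asarray(x, dtype=float)[None, :])[0]
    mask = np.ones(len(X_data), dtype=bool)
    for j, predicate in rule.items():
        mask &= predicate(X_data[:, j])  # rows where every condition holds
    coverage = float(mask.mean())
    if not mask.any():
        return 0.0, 0.0
    precision = float(np.mean(predict_fn(X_data[mask]) == target))
    return precision, coverage
```

Consider the illustrative rule from the text, on a toy grid of ages and smoking flags where the model flags high risk only for smokers over 65. Used as an anchor, that rule has precision 1.0. Dropping the smoking condition widens coverage but lowers precision, which is the trade-off the anchor search navigates.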

The anchor generation process searches for rules that meet a target precision while covering as many instances as possible. The original method uses beam search over candidate rules, with a multi-armed bandit procedure that estimates precision from as few model queries as possible. This approach often reveals surprising insights about which combinations of features are truly decisive for model predictions, potentially highlighting redundant features or unexpected interaction effects.

Practical Implementation Considerations

Successfully implementing explainable AI techniques for black box models requires careful attention to computational efficiency, explanation quality, and integration with existing workflows. The choice of technique should align with specific use cases, stakeholder needs, and operational constraints.

Computational Efficiency and Scalability

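Different explanation techniques have very different computational requirements. LIME fits a separate local model for each explanation, and each fit requires querying the black box on hundreds or thousands of perturbed samples. Its cost therefore grows with the number of predictions to explain and the cost of each model call. Exact SHAP values require evaluating all 2^M feature coalitions for M features, which quickly becomes infeasible. Model-specific algorithms such as TreeSHAP and sampling approximations such as KernelSHAP provide reasonable trade-offs between accuracy and speed.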

Gradient-based methods are generally cheaper for differentiable models because they reuse the model’s own backpropagation machinery. A plain gradient needs one backward pass, and Integrated Gradients needs one per integration step. However, they’re limited to models where gradients are available and meaningful, excluding tree-based ensembles and other non-differentiable approaches.

Production deployment of explainable AI often requires pre-computing explanations for common scenarios or developing efficient approximation methods. Caching strategies, model distillation, and explanation summarization techniques can help manage computational overhead while maintaining explanation quality.

Explanation Quality and Validation

The quality of explanations cannot be assumed based solely on mathematical properties or algorithmic sophistication. Validation of explanation quality requires both quantitative metrics and qualitative assessment by domain experts. Perturbation-based validation can test whether explanations correctly identify influential features, while human studies can assess whether explanations improve understanding and decision-making.
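One simple perturbation-based check compares two prediction changes. The first comes from "deleting" the top-k attributed features; the second is the average over deletions of k random features. A faithful explanation should produce the larger change. In this sketch, deleting means resetting a feature to a baseline value. That is an assumption, since other schemes such as resampling from the data are also used. The function name is hypothetical.

```python
import numpy as np

def deletion_check(predict_fn, x, attributions, baseline, k, n_random=50, seed=0):
    """Return (change from deleting top-k features, mean change for random k)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    f_x = predict_fn(x[None, :])[0]

    def change(idx):
        z = x.copy()
        z[idx] = baseline[idx]  # "delete" features by resetting them to the baseline
        return abs(f_x - predict_fn(z[None, :])[0])

    top_k = np.argsort(-np.abs(np.asarray(attributions, dtype=float)))[:k]
    random_changes = [change(rng.choice(x.size, size=k, replace=False)) for _ in range(n_random)]
    return float(change(top_k)), float(np.mean(random_changes))
```

If the top-k change is not clearly larger than the random average, the explanation is probably not identifying the features the model actually uses.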

Consistency across different explanation methods provides another important quality indicator. When LIME, SHAP, and gradient-based methods agree on feature importance rankings, confidence in the explanations increases. Significant disagreements warrant investigation and may reveal limitations in specific techniques or unexpected model behaviors.
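Agreement between two methods' importance rankings can be quantified with a rank correlation. The sketch computes Spearman's correlation on absolute attributions and, for brevity, assumes there are no tied scores. `scipy.stats.spearmanr` handles ties properly if SciPy is available.

```python
import numpy as np

def rank_agreement(attr_a, attr_b):
    """Spearman rank correlation between two methods' importance scores (no ties)."""
    a = np.abs(np.asarray(attr_a, dtype=float))
    b = np.abs(np.asarray(attr_b, dtype=float))
    ra = np.argsort(np.argsort(a))  # rank of each feature under method A
    rb = np.argsort(np.argsort(b))  # rank of each feature under method B
    return float(np.corrcoef(ra, rb)[0, 1])
```

A value near 1 means the methods order features the same way. A value near 0 or below is the kind of disagreement that warrants a closer look.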

The stability of explanations across similar inputs also affects their practical utility. Explanations that vary dramatically for nearly identical instances may indicate model instability or explanation method limitations, reducing trust and practical applicability.
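Stability can be estimated by perturbing an input slightly and measuring how far the explanation moves relative to the input. The sketch reports the largest such ratio over random perturbations, a local Lipschitz-style estimate. This is one possible measure among several. `explain_fn` stands for any hypothetical function mapping an input vector to an attribution vector.

```python
import numpy as np

def explanation_stability(explain_fn, x, eps=0.01, n_trials=20, seed=0):
    """Largest ||e(x) - e(x')|| / ||x - x'|| over small random perturbations x'."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    e_x = np.asarray(explain_fn(x), dtype=float)
    worst = 0.0
    for _ in range(n_trials):
        x_p = x + rng.uniform(-eps, eps, size=x.shape)
        diff = np.asarray(explain_fn(x_p), dtype=float) - e_x
        worst = max(worst, float(np.linalg.norm(diff) / np.linalg.norm(x_p - x)))
    return worst
```

Larger values mean the explanation changes more for nearly identical inputs. Comparing the estimate across instances or methods is usually more informative than reading any single number in isolation.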

Choosing the Right Explainable AI Technique

Selecting appropriate explainable AI techniques for black box models requires balancing multiple factors: the type of model being explained, the nature of the data, the specific use case requirements, and the technical constraints of the deployment environment.

For tabular data with moderate dimensionality, SHAP often provides the best combination of mathematical rigor and practical utility. Its game-theoretic foundation ensures fair attribution while supporting both local and global analysis. LIME serves as a useful alternative when a quick local approximation is sufficient. It is also valuable as a second, independently derived explanation whose agreement with SHAP builds confidence.

Computer vision applications typically benefit most from gradient-based methods like GradCAM, which produce spatial heat maps that people can check directly against the image. These techniques excel at revealing whether models focus on semantically meaningful regions or exploit spurious correlations in training data.

Text analysis applications often require specialized adaptations of general techniques. LIME’s text-specific implementation handles word-level attributions effectively. Attention weights can also offer insights into which input tokens a sequence model focuses on, although attention alone is not guaranteed to be a faithful explanation of a prediction.

High-stakes applications such as medical diagnosis or financial lending may require multiple complementary explanation techniques to build sufficient confidence in model decisions. Combining rule-based anchors with gradient attributions and permutation importance can provide comprehensive coverage of different explanation perspectives.

The most effective approach often involves implementing multiple explanation techniques and using their agreement or disagreement as indicators of model reliability. When different methods consistently identify similar important features, confidence in both the model and explanations increases. When methods disagree significantly, this signals areas requiring additional investigation or model improvement.

Conclusion

Explainable AI techniques for black box models have evolved from academic curiosities to essential tools for responsible AI deployment. As organizations increasingly rely on complex AI systems for critical decisions, the ability to understand and explain these systems becomes not just a technical requirement but a business imperative. The techniques covered here include LIME’s local approximations, SHAP’s rigorous attributions, gradient-based visualizations, and surrogate models. Together they provide a comprehensive toolkit for illuminating the decision-making processes of even the most complex AI systems.

The path forward requires balancing explanation quality with computational efficiency, choosing techniques that match specific use cases and stakeholder needs. As AI systems become more sophisticated and ubiquitous, explainable AI techniques will continue to evolve, but the fundamental principle remains unchanged: trustworthy AI requires understandable AI. By implementing these explainable AI techniques thoughtfully and systematically, organizations can harness the full power of black box models while maintaining the transparency and accountability that stakeholders rightfully demand.