Word embeddings have revolutionized how we represent language in machine learning, and Word2Vec stands as one of the most influential techniques in this space. However, understanding these high-dimensional representations can be challenging without proper visualization tools. This is where t-SNE (t-Distributed Stochastic Neighbor Embedding) becomes invaluable: it projects Word2Vec embeddings into two or three dimensions while keeping semantically similar words close to one another.

Understanding Word2Vec Embeddings

Word2Vec transforms words into dense numerical vectors that capture semantic meaning through contextual relationships. Developed by Tomas Mikolov and his team at Google, this technique learns word representations by analyzing how words appear together in large text corpora. The fundamental principle is that words appearing in similar contexts tend to have similar meanings, which translates into similar vector representations.

The magic of Word2Vec lies in its ability to encode semantic relationships mathematically. A famous example is the vector arithmetic “king” – “man” + “woman” ≈ “queen”: the vector nearest to the result, excluding the input words, is often “queen”. These embeddings typically live in high-dimensional spaces (commonly 100-300 dimensions), so they cannot be visualized directly without dimensionality reduction.
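The analogy arithmetic can be sketched with a handful of hand-made vectors. Everything here is an assumption for illustration: the three-dimensional `toy_vectors` and the `analogy` helper are hypothetical, and real Word2Vec vectors are learned from text rather than written by hand.

```python
import numpy as np

# Hypothetical toy vectors, hand-picked for illustration only.
# Axes loosely mean [royalty, maleness, femaleness]; learned embeddings
# have no such interpretable axes.
toy_vectors = {
    "king":  np.array([0.9, 0.8, 0.1]),
    "queen": np.array([0.9, 0.1, 0.8]),
    "man":   np.array([0.1, 0.9, 0.1]),
    "woman": np.array([0.1, 0.1, 0.9]),
    "apple": np.array([0.0, 0.2, 0.2]),
}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(positive, negative, vectors):
    """Word closest to sum(positive) - sum(negative), excluding the input words."""
    target = sum(vectors[w] for w in positive) - sum(vectors[w] for w in negative)
    candidates = [w for w in vectors if w not in positive and w not in negative]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

result = analogy(positive=["king", "woman"], negative=["man"], vectors=toy_vectors)
print(result)  # queen
```

Cosine similarity is used for the lookup because direction, rather than vector length, is what usually carries meaning in word embeddings.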

Word2Vec employs two primary architectures: Continuous Bag of Words (CBOW) and Skip-gram. CBOW predicts a target word based on surrounding context words, while Skip-gram does the reverse, predicting context words from a target word. Both approaches result in dense vector representations that capture nuanced semantic relationships, but these high-dimensional vectors require sophisticated visualization techniques to interpret meaningfully.
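The difference between the two architectures is easiest to see in the training examples each one builds from a sentence. The sketch below is a simplification under stated assumptions: the function names and the `window` parameter are hypothetical, and real implementations add details such as subsampling and negative sampling.

```python
def skipgram_pairs(tokens, window=2):
    """(target, context) pairs: the target word predicts each neighbor."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """(context_words, target) examples: the neighbors jointly predict the target."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples

sentence = "the cat sat on the mat".split()
print(skipgram_pairs(sentence, window=1)[:3])
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat')]
print(cbow_examples(sentence, window=1)[:2])
# [(['cat'], 'the'), (['the', 'sat'], 'cat')]
```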

The Challenge of High-Dimensional Visualization

High-Dimensional Challenge: Word2Vec embeddings typically exist in 100-300 dimensions, but human perception is limited to 2-3 dimensions. Solution: dimensionality reduction.

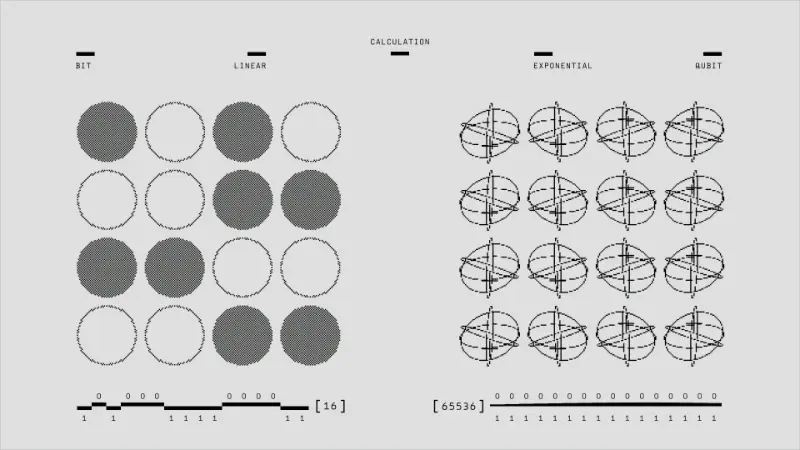

Traditional visualization techniques fail when dealing with high-dimensional data like word embeddings. Human perception is fundamentally limited to two or three spatial dimensions, making it impossible to directly visualize vectors in 100+ dimensional spaces. Simply projecting high-dimensional data onto lower dimensions using techniques like Principal Component Analysis (PCA) often results in significant information loss and can obscure important relationships.

The curse of dimensionality compounds this problem. As dimensions increase, the concept of distance becomes less meaningful, and points tend to become equidistant from each other. This makes it difficult to identify clusters or patterns that exist in the high-dimensional space. Moreover, linear dimensionality reduction techniques like PCA may not capture the non-linear relationships that exist in word embedding spaces.
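The tendency of points to become equidistant can be checked numerically. This is a rough sketch that uses random Gaussian points as a stand-in for embeddings (an assumption: trained word vectors are more structured than random data), and the `distance_contrast` helper is hypothetical.

```python
import numpy as np

def distance_contrast(n_points, n_dims, seed=0):
    """Relative spread (max - min) / min of distances from one point to all others."""
    rng = np.random.default_rng(seed)
    points = rng.standard_normal((n_points, n_dims))
    dists = np.linalg.norm(points[1:] - points[0], axis=1)
    return float((dists.max() - dists.min()) / dists.min())

# The contrast between the nearest and the farthest neighbor shrinks
# as the number of dimensions grows.
for dims in (2, 30, 300):
    print(dims, round(distance_contrast(500, dims), 2))
```

A small contrast value means the nearest and farthest neighbors are almost the same distance away, which is exactly the situation in which clusters become hard to pick out.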

Why t-SNE is Perfect for Word Embeddings

t-SNE addresses these challenges through a non-linear approach to dimensionality reduction. Unlike linear techniques, t-SNE is designed to preserve local neighborhood structure: points that are close in the original space tend to stay close in the map. Global structure, such as the distances between far-apart groups, is preserved much less reliably. This focus on neighborhoods makes it well-suited for word embeddings, where semantic relationships often form complex, non-linear patterns in high-dimensional space.

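The algorithm works by converting high-dimensional Euclidean distances into conditional probabilities that represent similarities, using a Gaussian kernel centered on each point. It then builds a low-dimensional map whose pairwise similarities, computed with a heavy-tailed Student t-distribution, match the high-dimensional ones as closely as possible by minimizing the Kullback-Leibler divergence between the two distributions. This process naturally pulls semantically related words together, although the gaps between the resulting groups should not be read as precise semantic distances.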
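The first half of that process, turning distances into conditional probabilities, can be sketched in a few lines of NumPy. This is a simplification rather than a full t-SNE implementation: it assumes one fixed `sigma` for every point, whereas the actual algorithm tunes a separate bandwidth per point to match the chosen perplexity. The function name is hypothetical.

```python
import numpy as np

def conditional_probabilities(X, sigma=1.0):
    """Row i holds p(j | i): Gaussian similarity of point i to every other point.

    Simplification: a single fixed sigma is used for all points.
    """
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    sq_dists = np.maximum(sq_dists, 0.0)           # guard against rounding error
    logits = -sq_dists / (2.0 * sigma ** 2)
    np.fill_diagonal(logits, -np.inf)              # a point is not its own neighbor
    logits -= logits.max(axis=1, keepdims=True)    # numerical stability
    P = np.exp(logits)
    return P / P.sum(axis=1, keepdims=True)

# Two nearby points and one distant point.
X = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0]])
P = conditional_probabilities(X)
print(np.round(P, 3))
```

In the first row almost all probability mass falls on the nearby second point, which is how closeness in the original space is encoded before the low-dimensional map is fitted.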

t-SNE’s probabilistic approach is particularly effective for word embeddings because it emphasizes local similarities over global distances. This means that synonyms and semantically related words tend to appear close together in the visualization, usually with visible separation between different semantic categories. Because it can capture non-linear relationships, t-SNE often reveals cluster structure that linear methods like PCA flatten or hide, while PCA remains the better choice when global geometry matters.

Implementing t-SNE Visualization: Step-by-Step Process

Data Preparation and Preprocessing

The first step involves loading your pre-trained Word2Vec model or training one from scratch. Popular libraries like Gensim make this process straightforward. Once you have your model, you’ll need to extract the word vectors and corresponding vocabulary. It’s crucial to decide which words to include in your visualization – using the entire vocabulary might result in cluttered plots, so focusing on the most frequent words or words relevant to your analysis is often more effective.

Preprocessing considerations include handling out-of-vocabulary words, deciding on case sensitivity, and potentially filtering words based on frequency thresholds. You might also want to remove stop words or focus on specific parts of speech depending on your analytical goals. The quality of your visualization heavily depends on these preprocessing decisions.
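The preparation steps above might look like the following sketch. The helper names (`select_words`, `load_vectors`) and the `path` argument are hypothetical; the Gensim calls assume Gensim 4.x and a model saved in the binary word2vec format, so adjust them to your setup.

```python
def select_words(vocab_by_frequency, stop_words=(), top_n=500, case_insensitive=True):
    """Pick up to top_n alphabetic words from a frequency-sorted vocabulary."""
    stop = {w.lower() for w in stop_words}
    selected, seen = [], set()
    for word in vocab_by_frequency:
        key = word.lower() if case_insensitive else word
        if word.lower() in stop or key in seen or not word.isalpha():
            continue  # skip stop words, case duplicates, numbers, punctuation
        seen.add(key)
        selected.append(word)
        if len(selected) == top_n:
            break
    return selected

def load_vectors(path, words=None, top_n=500, stop_words=()):
    """Load pre-trained vectors with Gensim and return (words, matrix)."""
    import numpy as np
    from gensim.models import KeyedVectors  # assumes gensim 4.x is installed

    kv = KeyedVectors.load_word2vec_format(path, binary=True)
    if words is None:
        # index_to_key is usually ordered from most to least frequent word
        words = select_words(kv.index_to_key, stop_words=stop_words, top_n=top_n)
    words = [w for w in words if w in kv.key_to_index]  # drop out-of-vocabulary words
    return words, np.vstack([kv[w] for w in words])

print(select_words(["the", "cat", "Cat", "dog", "42", "bird"], stop_words=["the"], top_n=2))
# ['cat', 'dog']
```

Keeping the word list and the matrix rows in the same order matters, because the plot labels are later matched to coordinates by position.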

Configuring t-SNE Parameters

t-SNE has several important parameters that significantly impact the visualization quality:

Perplexity is perhaps the most critical parameter, typically set between 5 and 50. It roughly corresponds to the number of close neighbors each point has and should be smaller than the number of data points. For word embeddings, values between 20-50 often work well, but experimentation is key.

Learning rate controls the step size of the optimization. Values between 10 and 1000 are common, with 200 being a good starting point. If the learning rate is too high, the layout can collapse into a ball of roughly equidistant points; if it is too low, most points stay compressed in a dense cloud and convergence is slow.

Number of iterations determines how long the algorithm runs. More iterations generally lead to better results but require more computational time. 1000 iterations is typically sufficient for most word embedding visualizations.
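A configuration along these lines could be written with scikit-learn as sketched below. The wrapper names are hypothetical and the values are the starting points suggested above, not tuned settings. The iteration count is left at the library default because its parameter name differs between scikit-learn releases (`n_iter` in older versions, `max_iter` in newer ones).

```python
def safe_perplexity(n_points, requested=30.0):
    """Clamp perplexity so it stays strictly below the number of points."""
    if n_points < 2:
        raise ValueError("t-SNE needs at least two points")
    return float(min(requested, n_points - 1))

def reduce_with_tsne(vectors, perplexity=30.0, learning_rate=200.0, seed=42):
    """Project an (n_words, n_dims) NumPy array to 2D with scikit-learn's TSNE."""
    from sklearn.manifold import TSNE  # assumes scikit-learn is installed

    tsne = TSNE(
        n_components=2,
        perplexity=safe_perplexity(len(vectors), perplexity),
        learning_rate=learning_rate,
        init="pca",          # PCA initialization tends to give more stable layouts
        random_state=seed,   # fixed seed for reproducible results
    )
    return tsne.fit_transform(vectors)

print(safe_perplexity(10, requested=30.0))    # 9.0
print(safe_perplexity(1000, requested=30.0))  # 30.0
```

Calling `reduce_with_tsne(matrix)` on a word-vector matrix returns an array of shape `(n_words, 2)` whose rows line up with the input rows.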

Execution and Optimization

Running t-SNE on word embeddings can be computationally intensive, especially for large vocabularies. Consider using implementations with optimizations like Barnes-Hut approximation, which reduces computational complexity from O(n²) to O(n log n). Libraries like scikit-learn provide efficient implementations with these optimizations.

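Memory management becomes crucial when working with large vocabularies. Because t-SNE positions all points jointly, separately embedded batches do not share a coordinate system, so it is usually better to reduce the vocabulary or use more memory-efficient data structures (for example, 32-bit floats) than to split the words into batches. Additionally, setting a random seed makes results reproducible, which is important for comparing different parameter settings or sharing results with others.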

Interpreting t-SNE Visualizations

Identifying Semantic Clusters

Well-executed t-SNE visualizations of word embeddings reveal distinct semantic clusters. You’ll typically observe groups of synonyms, words from the same category (like animals, colors, or emotions), and words that share syntactic properties clustering together. These clusters provide immediate insights into how the Word2Vec model has learned to represent different semantic concepts.

The distance between clusters in t-SNE space is only a rough, and sometimes misleading, indicator of semantic dissimilarity. Clusters that appear close together often represent related semantic domains, but distant clusters are not necessarily more different than nearby ones, and cluster sizes in the plot carry little meaning. Treat the spatial organization as a starting point for identifying potential issues with your word embeddings or discovering unexpected semantic relationships, then confirm findings in the original vector space.

Analyzing Relationships and Patterns

Beyond basic clustering, t-SNE visualizations can reveal more subtle patterns in word embeddings. You might observe linear or curved arrangements of words that represent semantic dimensions – for example, words arranged along a spectrum from positive to negative sentiment, or terms organized by intensity or magnitude.

Visualization Insights

✓ What to Look For:

- Tight semantic clusters

- Smooth transitions between related concepts

- Clear separation of different categories

- Meaningful spatial organization

⚠ Potential Issues:

- Scattered related words

- Artificial clustering artifacts

- Loss of important relationships

- Overemphasis on local structure

These patterns can help validate your Word2Vec model’s performance and identify areas where it might need improvement. For instance, if you notice that obviously related words are scattered across the visualization, it might indicate issues with your training data or model parameters.

Advanced Techniques and Best Practices

Interactive Visualizations

Static plots provide valuable insights, but interactive visualizations take the analysis to the next level. Tools like Plotly, Bokeh, or custom web applications allow users to hover over points to see word labels, zoom into specific regions, and dynamically filter the displayed words. This interactivity makes it easier to explore large vocabularies and identify specific words of interest.

Consider implementing features like search functionality to highlight specific words, color coding based on different attributes (frequency, part of speech, semantic category), and the ability to adjust visualization parameters in real-time. These features make your visualizations more accessible and useful for different audiences.
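As one possible starting point, hover labels, color coding, and a simple word search could be sketched with Plotly Express. The helper names and the column names (`word`, `x`, `y`, `category`) are assumptions made for this example, and the plotting function expects Plotly and pandas to be installed.

```python
def build_plot_table(words, coords, categories=None):
    """Arrange words and 2D coordinates as column lists for a plotting library."""
    if len(words) != len(coords):
        raise ValueError("words and coords must have the same length")
    table = {
        "word": list(words),
        "x": [float(c[0]) for c in coords],
        "y": [float(c[1]) for c in coords],
    }
    if categories is not None:
        table["category"] = list(categories)
    return table

def interactive_scatter(table, search=None):
    """Build a Plotly figure with hover labels; optionally annotate one word."""
    import plotly.express as px  # assumes plotly and pandas are installed

    color = "category" if "category" in table else None
    fig = px.scatter(table, x="x", y="y", hover_name="word", color=color)
    if search in table["word"]:
        i = table["word"].index(search)
        fig.add_annotation(x=table["x"][i], y=table["y"][i], text=search, showarrow=True)
    return fig

table = build_plot_table(["cat", "red"], [(0.5, 1.0), (-2.0, 3.0)], ["animal", "color"])
print(table["x"], table["y"])  # [0.5, -2.0] [1.0, 3.0]
```

With the libraries installed, `interactive_scatter(table, search="cat").show()` opens the plot, and `write_html` on the returned figure saves a standalone page that can be shared.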

Comparative Analysis

t-SNE visualizations become even more powerful when used for comparative analysis. You can visualize embeddings from different models, time periods, or domains to understand how word representations change. This approach is particularly valuable for studying semantic drift over time or comparing domain-specific embeddings.

When creating comparative visualizations, ensure consistent preprocessing and parameter settings across different datasets. Consider using techniques like Procrustes analysis to align different embedding spaces before visualization, making comparisons more meaningful.
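Orthogonal Procrustes alignment has a closed-form solution based on the singular value decomposition. The sketch below assumes both matrices contain the same words in the same row order; the function name and the synthetic data are hypothetical.

```python
import numpy as np

def procrustes_rotation(source, target):
    """Orthogonal matrix R minimizing the Frobenius norm of (source @ R - target)."""
    u, _, vt = np.linalg.svd(source.T @ target)
    return u @ vt

# Synthetic check: rotate a random "embedding space", then recover the alignment.
rng = np.random.default_rng(0)
base = rng.standard_normal((50, 5))                    # stand-in for model A vectors
true_rotation, _ = np.linalg.qr(rng.standard_normal((5, 5)))
rotated = base @ true_rotation                         # stand-in for model B vectors

R = procrustes_rotation(rotated, base)
aligned = rotated @ R
print(np.allclose(aligned, base))  # True
```

With real models the two spaces differ by more than a rotation, so the aligned vectors will only approximately match; the residual is itself a useful signal of how much a word has shifted.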

Validation and Quality Assessment

Always validate your t-SNE visualizations against known semantic relationships. Create test sets of word pairs with known similarity scores and check whether these relationships are preserved in your visualization. This validation step helps ensure that your visualization accurately represents the underlying embedding space.
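One simple quantitative check, offered here as an assumption rather than a standard metric from this article, is to measure how many of each word's nearest neighbors in the original space are still its nearest neighbors in the 2D layout. The helper names and the random stand-in data are hypothetical.

```python
import numpy as np

def knn_indices(points, k):
    """Indices of the k nearest neighbors (Euclidean) of every row, excluding itself."""
    sq_norms = (points ** 2).sum(axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * points @ points.T
    np.fill_diagonal(sq_dists, np.inf)
    return np.argsort(sq_dists, axis=1)[:, :k]

def neighbor_overlap(high_dim, low_dim, k=10):
    """Mean fraction of each point's k nearest neighbors shared by both spaces."""
    high_nn = knn_indices(high_dim, k)
    low_nn = knn_indices(low_dim, k)
    shared = [len(set(h) & set(l)) / k for h, l in zip(high_nn, low_nn)]
    return float(np.mean(shared))

rng = np.random.default_rng(0)
vectors = rng.standard_normal((30, 8))   # stand-in for word vectors
layout = vectors[:, :2]                  # stand-in for a 2D layout
print(neighbor_overlap(vectors, vectors, k=5))  # 1.0, identical spaces
print(neighbor_overlap(vectors, layout, k=5))
```

A score near 1 means local neighborhoods survived the projection, while a low score suggests the plot should not be trusted for fine-grained comparisons. If your analysis relies on cosine similarity, normalizing the vectors to unit length first makes the Euclidean ranking agree with the cosine ranking.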

Consider using multiple random initializations and parameter settings to ensure your results are robust. t-SNE can sometimes produce different results based on random initialization, so running multiple experiments helps identify stable patterns versus artifacts of the algorithm.

Common Pitfalls and Solutions

Over-interpretation of Distances

One of the most common mistakes is over-interpreting the distances in t-SNE plots. While the algorithm preserves local neighborhoods well, global distances can be misleading. Two clusters that sit far apart in the plot are not necessarily less similar than two that sit side by side, and the apparent size or density of a cluster says little about its spread in the original high-dimensional space.

To address this, focus on cluster formation rather than specific distances. Use the visualization to identify groups of related words rather than making precise quantitative comparisons between distant points. When precise distance relationships are important, consider supplementing t-SNE with other visualization techniques or quantitative analysis.

Parameter Sensitivity

t-SNE results can vary significantly with different parameter settings, particularly perplexity. Always experiment with multiple perplexity values and examine how they affect your visualization. Lower perplexity values emphasize local structure, while higher values reveal more global patterns.
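Perplexity has a precise meaning: it is 2 raised to the Shannon entropy (in bits) of a point's neighbor distribution, which is why it behaves like an effective number of neighbors. The small helper below is a hypothetical illustration of that definition, not part of any library.

```python
import numpy as np

def perplexity(p):
    """Perplexity 2**H(p) of a discrete distribution p (zero entries are ignored)."""
    p = np.asarray(p, dtype=float)
    nonzero = p[p > 0]
    entropy_bits = -np.sum(nonzero * np.log2(nonzero))
    return float(2.0 ** entropy_bits)

print(perplexity([0.25, 0.25, 0.25, 0.25]))            # 4.0: four equally likely neighbors
print(round(perplexity([0.97, 0.01, 0.01, 0.01]), 2))  # about 1.18: one dominant neighbor
```

Setting perplexity to 30 therefore asks t-SNE to choose each point's kernel width so that roughly 30 neighbors receive meaningful weight.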

Document your parameter choices and their rationale. This documentation becomes crucial when sharing results or reproducing analysis. Consider creating multiple visualizations with different parameter settings to provide a more comprehensive view of your data.

Computational Considerations

Large vocabularies can make t-SNE computationally prohibitive. Consider strategies like hierarchical visualization, where you first cluster words using faster methods and then apply t-SNE to representatives from each cluster. Alternatively, focus on subsets of your vocabulary based on frequency, relevance, or other criteria.
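The representative-selection step of such a hierarchical approach might be sketched as follows. It assumes cluster labels already exist (for example from k-means, which is not shown here); the function name and the toy data are hypothetical.

```python
import numpy as np

def cluster_representatives(vectors, labels, words):
    """For each cluster label, return the word whose vector is closest to the centroid."""
    labels = np.asarray(labels)
    representatives = {}
    for label in np.unique(labels):
        members = np.flatnonzero(labels == label)
        centroid = vectors[members].mean(axis=0)
        dists = np.linalg.norm(vectors[members] - centroid, axis=1)
        representatives[int(label)] = words[members[np.argmin(dists)]]
    return representatives

words = ["cat", "dog", "wolf", "red", "blue", "green"]
vectors = np.array([[1.0, 0.0], [1.1, 0.1], [1.6, 0.5],
                    [0.0, 1.0], [0.1, 1.1], [0.5, 1.6]])
labels = [0, 0, 0, 1, 1, 1]  # hypothetical cluster assignments
print(cluster_representatives(vectors, labels, words))
# {0: 'dog', 1: 'blue'}
```

Running t-SNE only on the representatives keeps the plot readable and the runtime manageable, at the cost of hiding the structure inside each cluster.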

Modern implementations offer various optimization techniques, including approximate algorithms and GPU acceleration. Leverage these tools when working with large datasets, but be aware that approximations might affect the quality of your results.

Conclusion

Visualizing Word2Vec embeddings with t-SNE provides powerful insights into the semantic structure captured by these models. The technique’s ability to preserve local neighborhoods makes it well suited to exploring high-dimensional word representations, as long as global distances are read with caution. By following best practices in parameter selection, preprocessing, and interpretation, you can create visualizations that effectively communicate the semantic relationships in your word embeddings.

The combination of Word2Vec and t-SNE has become an essential tool in natural language processing, enabling researchers and practitioners to validate models, explore semantic relationships, and communicate findings effectively. As both techniques continue to evolve, this powerful combination will remain valuable for understanding and visualizing the complex world of word embeddings.

Remember that t-SNE visualization is just one tool in your analysis toolkit. Combine it with quantitative evaluation metrics, domain expertise, and other visualization techniques to gain comprehensive insights into your word embeddings. The goal is not just to create beautiful visualizations, but to develop deeper understanding of how language models capture and represent semantic meaning.