If you’ve been exploring machine learning, natural language processing, or artificial intelligence, you’ve likely encountered the terms “embeddings” and “vectors.” While these terms are often used interchangeably in casual conversation, they represent distinct concepts that are crucial to understanding modern AI systems. Let’s dive deep into the difference between embeddings and vectors, exploring their relationship, unique characteristics, and practical applications.

What Are Vectors?

At its core, a vector is a mathematical object that represents a quantity with both magnitude and direction. In the context of machine learning and data science, vectors are typically represented as ordered lists of numbers, which we can think of as coordinates in a multi-dimensional space.

For example, a simple two-dimensional vector might look like [3, 5], representing a point in 2D space. In machine learning applications, vectors can have hundreds or even thousands of dimensions, such as [0.25, -0.18, 0.92, …, 0.41].

Vectors are fundamental building blocks in mathematics and computer science. They can represent anything from pixel values in an image to features extracted from data. The key characteristic of a vector is that it’s simply a numerical representation: a container for numbers arranged in a specific order. Vectors don’t inherently carry semantic meaning; they’re just mathematical structures that follow specific rules for operations like addition, scalar multiplication, and distance calculation.
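To make the “just numbers” point concrete, here is a minimal sketch of those basic operations, applied to the 2D vector [3, 5] from earlier and a second, arbitrary vector. NumPy is our choice here, not something the article prescribes:

```python
import numpy as np

# Two plain vectors: ordered lists of numbers with no learned meaning attached.
a = np.array([3.0, 5.0])
b = np.array([1.0, -2.0])

total = a + b                      # element-wise addition -> [4., 3.]
scaled = 2.0 * a                   # scalar multiplication -> [6., 10.]
distance = np.linalg.norm(a - b)   # Euclidean distance between the two points

print(total, scaled, distance)
```

Nothing in these operations depends on what the numbers mean; the same rules apply whether the vector holds coordinates, measurements, or pixel intensities.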

Common Uses of Vectors

Vectors appear throughout computer science and mathematics in various forms:

- Coordinate systems: Representing positions in space, whether 2D, 3D, or higher dimensions

- Feature representations: Storing measurements or characteristics of data points, such as height (cm), weight (kg), and age (years) as [170, 65, 28]

- Image data: Pixels in an image can be flattened into a single vector of RGB values

- Statistical data: Collections of measurements or survey responses organized as numerical arrays

The versatility of vectors makes them indispensable tools, but they become particularly powerful when they’re used to represent meaning—which brings us to embeddings.

What Are Embeddings?

Embeddings are a special type of vector that serves a specific purpose: representing complex, high-dimensional data (like words, sentences, images, or users) in a lower-dimensional continuous vector space while preserving meaningful relationships and semantic properties.

The crucial distinction is that embeddings are learned representations. They’re not arbitrary collections of numbers—they’re carefully constructed through training processes to capture the essence and relationships of the data they represent. When we create embeddings, we’re translating complex information into numerical form in a way that similar items end up with similar vector representations.

Consider word embeddings as a classic example. The word “king” might be represented as a 300-dimensional vector like [0.13, -0.27, 0.45, …, 0.82]. These values aren’t random: together they encode latent features of the word, although individual dimensions are rarely interpretable on their own. Remarkably, well-trained embeddings exhibit useful geometric properties. The famous example is: vector(“king”) – vector(“man”) + vector(“woman”) ≈ vector(“queen”).
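The analogy can be reproduced with plain vector arithmetic followed by a cosine-similarity search. The sketch below uses tiny hand-picked 3-dimensional vectors (hypothetical values, not taken from any trained model) so the arithmetic is easy to follow. As analogy benchmarks commonly do, it excludes the three input words from the candidate list:

```python
import numpy as np

# Hypothetical 3-d "embeddings", hand-picked for illustration only.
# Real word embeddings (e.g. 300-d Word2Vec) are learned from text.
emb = {
    "king":  np.array([0.90,  0.80, 0.10]),
    "queen": np.array([0.88, -0.75, 0.12]),
    "man":   np.array([0.10,  0.80, 0.00]),
    "woman": np.array([0.10, -0.80, 0.00]),
    "apple": np.array([0.00,  0.05, 0.95]),
}

def cosine(u, v):
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def analogy(a, b, c, vocab):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b, c."""
    target = vocab[a] - vocab[b] + vocab[c]
    candidates = [w for w in vocab if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vocab[w], target))

print(analogy("king", "man", "woman", emb))  # -> queen
```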

How Embeddings Capture Meaning

Embeddings work by mapping discrete or complex objects into a continuous vector space where the geometric relationships between vectors reflect semantic or functional relationships between the objects they represent. This is achieved through various training methods:

Neural network training: Most modern embeddings are learned as part of neural network training. For instance, Word2Vec learns word embeddings either by predicting a word from its surrounding context (CBOW) or by predicting the surrounding context from a word (skip-gram). The network’s internal representations (the embedding layer) learn to place semantically similar words close together in vector space.
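Under the hood, an embedding layer is a weight matrix with one row per vocabulary item, and “embedding” a word means selecting its row. This minimal sketch (the vocabulary, sizes, and random initialization are all hypothetical, and no training is performed) shows that multiplying a one-hot vector by that matrix is the same as a direct row lookup:

```python
import numpy as np

rng = np.random.default_rng(0)

vocab = ["the", "cat", "sat", "dog"]   # hypothetical 4-word vocabulary
vocab_size, dim = len(vocab), 3

# The embedding layer is a trainable matrix with one row per vocabulary entry.
# Here it is randomly initialized; training (e.g. Word2Vec) would adjust it.
E = rng.normal(size=(vocab_size, dim))

word_id = vocab.index("cat")

# Multiplying a one-hot vector by E selects a single row...
one_hot = np.zeros(vocab_size)
one_hot[word_id] = 1.0
via_matmul = one_hot @ E

# ...so in practice the layer is implemented as a direct row lookup.
via_lookup = E[word_id]

print(via_lookup.shape)  # -> (3,)
```

The lookup form is why embedding layers stay cheap even for large vocabularies: no one-hot vector ever has to be materialized.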

Dimensionality reduction: Some embedding techniques start with high-dimensional representations and compress them while preserving important relationships. The goal is to find a lower-dimensional space that maintains the essential structure of the data.
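Principal component analysis (PCA) is one classic instance of this idea; the article doesn’t name a specific technique, so this choice is ours. The sketch compresses synthetic 10-dimensional data that actually varies along only two hidden directions (hypothetical data) down to 2 dimensions, then reports the fraction of variance retained:

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic data: 200 points in 10-D generated from 2 hidden factors plus small noise.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 10))

# PCA via SVD: center the data, then project onto the top-k right singular vectors.
X_centered = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
k = 2
X_reduced = X_centered @ Vt[:k].T   # shape (200, 2): the compressed representation

# Fraction of total variance captured by the k kept components.
explained = float((S[:k] ** 2).sum() / (S ** 2).sum())
print(X_reduced.shape, explained)
```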

Distance preservation: Good embeddings place similar items close together in the vector space. If “dog” and “puppy” are semantically related, their embedding vectors should lie near each other, which is typically measured as a high cosine similarity or a small Euclidean distance.
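Here is a minimal sketch of both measures on made-up 4-dimensional vectors for “dog”, “puppy”, and an unrelated word, “car” (all values hypothetical). Note the opposite conventions: for cosine similarity higher means closer, while for Euclidean distance lower means closer:

```python
import numpy as np

def cosine_similarity(u, v):
    """Higher is more similar: 1.0 for vectors pointing the same way."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

def euclidean_distance(u, v):
    """Lower is more similar: 0.0 for identical vectors."""
    return float(np.linalg.norm(u - v))

# Hypothetical 4-d embeddings, made up for illustration.
dog   = np.array([0.80, 0.10, 0.55, 0.20])
puppy = np.array([0.75, 0.15, 0.60, 0.25])
car   = np.array([-0.20, 0.90, 0.05, -0.40])

# Both measures agree that dog/puppy are closer than dog/car.
print(cosine_similarity(dog, puppy), cosine_similarity(dog, car))
print(euclidean_distance(dog, puppy), euclidean_distance(dog, car))
```

For unit-length vectors the two measures always rank neighbors identically, since the squared Euclidean distance equals 2 − 2 × cosine similarity; this is why many systems L2-normalize embeddings first.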

The power of embeddings lies in this preservation of relationships. When you compute the distance between embedding vectors, you’re actually measuring semantic similarity, functional similarity, or whatever relationship the embedding was trained to capture.

The Relationship Between Embeddings and Vectors

Here’s where the distinction becomes clear: all embeddings are vectors, but not all vectors are embeddings. An embedding is a vector with a specific purpose and properties—it’s a semantically meaningful representation learned from data.

Think of it this way: “vector” is the general mathematical term for an ordered list of numbers, while “embedding” is a specialized application of vectors in machine learning. It’s similar to how all squares are rectangles, but not all rectangles are squares.

A random collection of numbers arranged in order is a vector. A carefully learned representation of the word “artificial” that places it near “synthetic” and “human-made” in semantic space is an embedding (which is also a vector).

Figure: How word embeddings capture semantic relationships (example vectors forming two nearby pairs: [0.82, -0.34, …] and [0.79, -0.31, …]; [0.15, 0.67, …] and [0.18, 0.71, …])

Key Distinguishing Characteristics

Purpose and intent: Vectors are general-purpose mathematical objects used for countless applications. Embeddings have a specific purpose: representing complex entities in a way that captures their meaning and relationships.

How they’re created: Vectors can be created manually, calculated directly, or generated through any process. Embeddings are specifically learned through training processes designed to capture semantic or structural properties of data.

Semantic meaning: Standard vectors might represent arbitrary features or measurements without inherent relationships. Embeddings are constructed so that the geometric properties of the vector space reflect meaningful relationships in the original data domain.

Dimensionality considerations: While vectors can have any number of dimensions, embeddings typically involve dimensionality reduction: taking high-dimensional or discrete data and representing it in a lower-dimensional continuous space (though often still with hundreds of dimensions).

Practical Examples That Illustrate the Difference

Let’s explore concrete examples that highlight the distinction between embeddings and vectors.

Example 1: Representing Text

Vector approach: You could represent the sentence “The cat sat” using a bag-of-words vector. If your vocabulary has 10,000 words, you’d create a 10,000-dimensional vector where each position corresponds to a word, and the value is 1 if that word appears in the sentence, 0 otherwise. This is a vector, but it’s sparse, high-dimensional, and doesn’t capture any semantic meaning or word relationships.

Embedding approach: With sentence embeddings (like those from BERT or Sentence-BERT), “The cat sat” becomes a dense vector (768 dimensions for BERT-base-sized models) in which similar sentences have similar embeddings. The sentence “A feline was seated” would have an embedding close to “The cat sat” in vector space, even though they share no common words. This captures meaning, not just word presence.
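The limitation of bag-of-words shows up as soon as you compare the two example sentences. This minimal sketch uses a hypothetical seven-word vocabulary in place of a 10,000-word one, plus naive lowercase-and-split tokenization:

```python
import numpy as np

def bag_of_words(sentence, vocab):
    """Binary bag-of-words: 1.0 at a word's index if it appears in the sentence."""
    index = {word: i for i, word in enumerate(vocab)}
    vec = np.zeros(len(vocab))
    for token in sentence.lower().split():
        if token in index:
            vec[index[token]] = 1.0
    return vec

# Tiny hypothetical vocabulary standing in for a real 10,000-word one.
vocab = ["a", "cat", "feline", "sat", "seated", "the", "was"]

v1 = bag_of_words("The cat sat", vocab)
v2 = bag_of_words("A feline was seated", vocab)

# No shared words means orthogonal vectors: cosine similarity is exactly 0,
# even though the two sentences mean nearly the same thing.
cos = v1 @ v2 / (np.linalg.norm(v1) * np.linalg.norm(v2))
print(v1, v2, cos)
```

A sentence-embedding model is meant to close exactly this gap, giving the two sentences a high cosine similarity despite zero word overlap.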

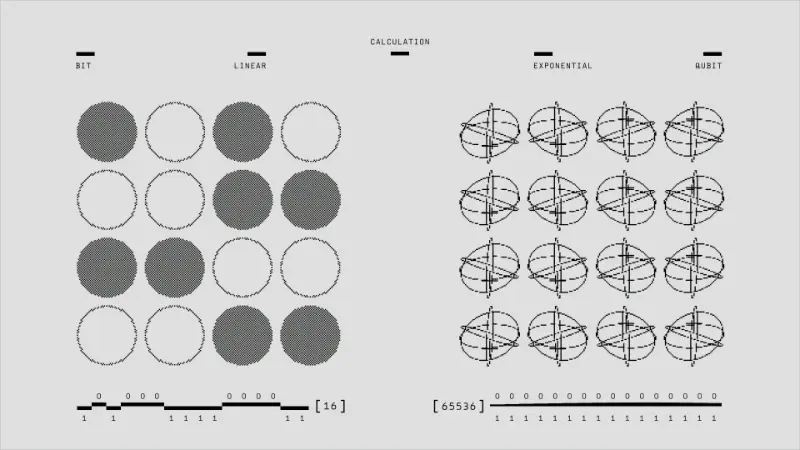

Figure: Visual comparison of vectors vs. embeddings. Generic vectors: direct measurements, no learned meaning, human-defined features, easy to interpret. Embeddings: learned from data, capture relationships, carry semantic meaning, place similar items close together.

Example 2: User Preferences

Vector approach: A user’s movie ratings could be stored as a vector: [5, 3, 4, 2, …] where each position represents their rating for a specific movie. This is straightforward data storage using vectors.

Embedding approach: A recommendation system might learn user embeddings where each user is represented as a dense vector in a latent space. Users with similar taste would have similar embeddings, even if they haven’t rated the same movies. The system learns to embed users based on patterns in their behavior, capturing implicit preferences and similarities.
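A common way to learn such embeddings is matrix factorization: approximate the partially observed rating matrix as the product of a user-embedding matrix and a movie-embedding matrix. The article doesn’t name an algorithm; this sketch uses alternating least squares (ALS) on a tiny hypothetical rating matrix, with the embedding size, regularization strength, and iteration count chosen arbitrarily:

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ratings: rows = users, columns = movies, 0 = not rated.
R = np.array([
    [5, 4, 0, 1, 0],
    [4, 0, 5, 0, 1],
    [1, 0, 1, 5, 4],
    [0, 1, 0, 4, 5],
], dtype=float)
observed = R > 0

k, reg = 2, 0.1                                    # embedding size, L2 penalty
U = rng.normal(scale=0.1, size=(R.shape[0], k))    # user embeddings
M = rng.normal(scale=0.1, size=(R.shape[1], k))    # movie embeddings

def loss(U, M):
    """Squared error on observed ratings plus L2 regularization."""
    err = np.where(observed, R - U @ M.T, 0.0)
    return float((err ** 2).sum() + reg * ((U ** 2).sum() + (M ** 2).sum()))

losses = [loss(U, M)]
for _ in range(50):
    # Fix movie embeddings; solve a small ridge regression for each user.
    for u in range(R.shape[0]):
        Mo = M[observed[u]]
        U[u] = np.linalg.solve(Mo.T @ Mo + reg * np.eye(k), Mo.T @ R[u, observed[u]])
    # Fix user embeddings; solve a small ridge regression for each movie.
    for m in range(R.shape[1]):
        Uo = U[observed[:, m]]
        M[m] = np.linalg.solve(Uo.T @ Uo + reg * np.eye(k), Uo.T @ R[observed[:, m], m])
    losses.append(loss(U, M))

predicted = U @ M.T   # also fills in the unrated cells
print(np.round(predicted, 1))
```

Each row of `U` is a learned user embedding that can be compared with cosine similarity, just like word vectors. Because every ALS step exactly minimizes the loss over one block of parameters, the training loss never increases; with real data you would also hold out some ratings to check that predictions generalize.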

Example 3: Image Data

Vector approach: An image can be represented as a vector by flattening all pixel values into a single array. A 100×100 RGB image becomes a 30,000-dimensional vector (100 × 100 × 3 color channels). This is a direct vectorization of the data.
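The flattening step is a single reshape. This sketch uses a randomly generated stand-in for a real 100×100 RGB image (hypothetical pixel values):

```python
import numpy as np

# A hypothetical 100x100 RGB image: height x width x 3 channels, values 0-255.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(100, 100, 3)).astype(np.uint8)

# Flattening concatenates every pixel's R, G, B values into one long vector.
pixel_vector = image.reshape(-1)

print(pixel_vector.shape)  # -> (30000,)
```

With NumPy’s default row-major order, the first three entries are the red, green, and blue values of the top-left pixel.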

Embedding approach: A deep learning model like ResNet can generate image embeddings, typically taken from the layer just before the classifier: 512 dimensions for ResNet-18 or ResNet-34, and 2,048 for ResNet-50. These vectors capture high-level visual concepts. Similar images (like two different photos of cats) will have similar embeddings, even if their pixel-level vectors are completely different.

Why Understanding This Distinction Matters

Grasping the difference between embeddings and vectors is more than academic—it has practical implications for how you approach machine learning problems and understand AI systems.

Model Architecture Decisions

When designing machine learning models, you need to know whether you’re working with raw feature vectors or learned embeddings. This affects:

- Input processing: Raw vectors might need scaling or normalization, while embeddings often come pre-trained and ready to use

- Model complexity: Models using embeddings can often be simpler because the embeddings already capture complex relationships

- Transfer learning: Pre-trained embeddings allow you to leverage knowledge from large datasets, something not possible with simple feature vectors
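For the raw-vector case in the first bullet, a typical preprocessing step is standardization. Here is a minimal sketch using height/weight/age feature vectors like the one from earlier (all values hypothetical); embedding vectors, by contrast, are often used as-is or simply L2-normalized:

```python
import numpy as np

# Hypothetical raw feature vectors: [height_cm, weight_kg, age_years] per person.
X = np.array([
    [170.0, 65.0, 28.0],
    [182.0, 80.0, 45.0],
    [158.0, 52.0, 33.0],
])

# Z-score standardization: each feature ends up with mean 0 and standard deviation 1,
# so features measured on larger scales no longer dominate distance computations.
mean = X.mean(axis=0)
std = X.std(axis=0)
X_scaled = (X - mean) / std
print(np.round(X_scaled, 2))
```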

Performance and Efficiency

Embeddings make similarity search meaningful in ways that raw vectors often cannot: because distance in embedding space tracks relatedness, the nearest neighbors of a query are genuinely similar items. Combined with approximate nearest neighbor search, this lets you quickly find similar items among millions of candidates. This powers everything from search engines to recommendation systems.
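Approximate nearest neighbor indexes speed up an exact search like the one below, trading a little accuracy for speed. This brute-force sketch, with random hypothetical vectors standing in for real embeddings, shows the underlying operation: score every candidate by cosine similarity and keep the top k. ANN libraries such as FAISS or Annoy replace the full scan with an index structure:

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical corpus: 10,000 random 64-d vectors standing in for item embeddings.
corpus = rng.normal(size=(10_000, 64)).astype(np.float32)
corpus /= np.linalg.norm(corpus, axis=1, keepdims=True)   # L2-normalize each row

def top_k(query, corpus, k=5):
    """Exact (brute-force) nearest neighbors by cosine similarity."""
    query = query / np.linalg.norm(query)
    scores = corpus @ query                    # cosine similarity, since rows are unit length
    idx = np.argpartition(-scores, k)[:k]      # indices of the k best scores, unordered
    return idx[np.argsort(-scores[idx])]       # sort just those k, best first

# A slightly perturbed copy of item 42 should retrieve item 42 first.
query = corpus[42] + 0.01 * rng.normal(size=64).astype(np.float32)
print(top_k(query, corpus))
```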

Additionally, embeddings typically require fewer dimensions than one-hot encoded vectors or raw feature representations, leading to reduced memory usage and faster computation.

Interpretability and Debugging

Understanding that you’re working with embeddings helps in model interpretation. When debugging a recommendation system, knowing that user and item embeddings should place similar entities close together gives you a clear diagnostic tool. You can visualize embeddings, examine nearest neighbors, and perform embedding arithmetic to understand what your model has learned.

Common Embedding Techniques and Their Vector Properties

Different embedding methods create vectors with different properties, tailored to specific types of data and tasks.

Word Embeddings

Word2Vec, GloVe, and FastText create dense vector representations of words where semantic similarity corresponds to vector similarity. These typically use 50 to 300 dimensions. The vectors exhibit remarkable properties like analogical reasoning: the vector relationship between “Paris” and “France” is similar to that between “Rome” and “Italy.”

Contextual Embeddings

BERT and GPT-based models create contextualized embeddings where the same word gets different vectors depending on context. The word “bank” in “river bank” gets a different embedding than in “savings bank.” These are higher-dimensional (often 768 or 1024 dimensions) and capture nuanced meaning.

Graph Embeddings

Node2Vec and GraphSAGE create embeddings for nodes in a graph, where connected or structurally similar nodes have similar embeddings. These enable machine learning on graph-structured data like social networks or knowledge graphs.
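Node2Vec and GraphSAGE both need a fair amount of machinery, so this sketch substitutes a different, older technique, spectral embedding (Laplacian eigenmaps), to illustrate the same principle on a tiny hypothetical graph: nodes in the same tightly connected cluster receive similar coordinates.

```python
import numpy as np

# Hypothetical graph: two triangles (0-1-2 and 3-4-5) joined by the single edge 2-3.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
n = 6
A = np.zeros((n, n))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0

# Graph Laplacian L = D - A, where D is the diagonal degree matrix.
L = np.diag(A.sum(axis=1)) - A

# Eigenvectors for the smallest nonzero eigenvalues give a low-dimensional
# embedding in which well-connected nodes sit close together.
eigvals, eigvecs = np.linalg.eigh(L)   # eigenvalues in ascending order
embedding = eigvecs[:, 1:2]            # skip the constant eigenvector; keep 1 dimension

print(np.round(embedding.ravel(), 3))
```

Nodes 0 to 2 get values of one sign and nodes 3 to 5 the opposite sign, so this single embedding dimension separates the two triangles. The overall sign of an eigenvector is arbitrary, so which cluster comes out positive can vary.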

Visual Embeddings

Convolutional neural networks create image embeddings that capture visual features at various levels of abstraction. Earlier layers might capture edges and textures (low-level features), while deeper layers capture complex objects and scenes (high-level semantic features).

Working With Embeddings vs. Generic Vectors in Practice

In real-world applications, the distinction between embeddings and vectors influences how you structure your data pipeline and model architecture.

When working with generic vectors, you typically focus on feature engineering—manually crafting representations that capture relevant information. You might normalize values, handle missing data, and combine features thoughtfully. The vectors are tools for organizing your data.

When working with embeddings, you’re often either using pre-trained embeddings (transfer learning) or training an embedding layer as part of your model. The focus shifts from manual feature creation to learning good representations. You might fine-tune embeddings on your specific task or freeze them and use them as fixed features.

For similarity-based tasks, embeddings shine because they’re specifically designed to make similar items close in vector space. If you’re building a semantic search engine, recommendation system, or duplicate detection system, embeddings are typically the right choice. Generic feature vectors might not capture the kind of similarity you care about.

Conclusion

The difference between embeddings and vectors ultimately comes down to purpose and properties. Vectors are the mathematical foundation—ordered collections of numbers that can represent any quantitative information. Embeddings are specialized vectors designed to capture meaning, learned through training to preserve semantic or structural relationships in a continuous space.

Understanding this distinction empowers you to make better decisions in machine learning projects, choose appropriate techniques for your problems, and communicate more precisely about AI systems. Whether you’re building a search engine, creating a recommendation system, or working on natural language understanding, knowing when you need embeddings versus simple vectors makes all the difference in creating effective solutions.