In modern machine learning and artificial intelligence, neural networks are powerful computational models loosely inspired by the workings of the human brain. Built from interconnected artificial neurons, they have reshaped fields from natural language processing to computer vision. Different architectures suit different tasks, such as speech recognition, image classification, and medical diagnosis. They include basic feed-forward networks, convolutional networks tailored for image processing, and recurrent networks suited to temporal sequences.

This article discusses, in depth, the types of neural network models and how they tackle complex problems, learn from large amounts of data, and emulate the intricate workings of the human mind.

Artificial Neural Networks

Artificial neural networks (ANNs) have become indispensable tools in artificial intelligence (AI) and machine learning (ML), driving advances in many domains. These computational models are inspired by biological neurons and their interconnections, and they are widely used for pattern recognition, data analysis, and complex problem-solving. Different ANN architectures cater to specific tasks, each designed to perform well in its own domain.

Importance in AI and ML

ANNs play a pivotal role in driving advances in AI and ML, enabling machines to learn from data, recognize patterns, and make decisions autonomously. By combining the processing of many interconnected neurons, ANNs have transformed fields like image recognition, natural language processing, and predictive analytics. Their ability to improve through training, driven by training data and optimization algorithms, makes them important tools for tackling complex real-world problems.

Diverse Types of Neural Network Models

Within the realm of ANNs, a multitude of models exists, each tailored to address specific tasks and challenges.

- Feed-forward neural networks, characterized by their forward data flow from input to output layer, excel in tasks such as image classification and speech recognition.

- Convolutional neural networks (CNNs), with their specialized architecture comprising convolutional layers and pooling layers, dominate the field of computer vision, enabling tasks like object detection and image segmentation.

- Recurrent neural networks (RNNs), equipped with feedback loops and memory cells, prove instrumental in handling sequential data, making them ideal for tasks such as language translation, time series analysis, and speech synthesis.

- Deep neural networks (DNNs), with their numerous hidden layers, model complex relationships within data and often achieve high accuracy in tasks ranging from medical diagnosis to financial forecasting.

Let’s look at each type in more detail.

Feed-forward Neural Networks

Feed-forward neural networks (FFNNs) are characterized by their sequential flow of data from input to output layers without any feedback loops.

Architecture of Feed-forward Networks

FFNNs consist of an input layer, one or more hidden layers, and an output layer. Each layer comprises multiple neurons, also known as nodes, linked by weighted connections. In a fully connected network, each neuron in a layer is connected to every neuron in the subsequent layer, forming a dense network of connections. Activation functions determine the output of each neuron and introduce non-linearity into the network.

Forward Propagation Process

Forward propagation is the mechanism through which data flows sequentially from the input layer through the hidden layers to the output layer. At each neuron, the weighted sum of inputs, along with a bias term, is computed and passed through an activation function to produce the neuron’s output. This process continues layer by layer until the final output is generated by the output layer. The output of the network is compared to the ground truth labels using a loss function to measure the network’s performance.
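
The forward pass described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function names, the sigmoid activation, the weight layout, and the hand-picked weights are all assumptions made for the example.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_forward(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    weights[j][i] is the weight from input i to neuron j
    (an illustrative layout, chosen for this sketch).
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs plus the bias term.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def forward(inputs, layers):
    """Propagate inputs layer by layer through (weights, biases, activation) triples."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_forward(activations, weights, biases, activation)
    return activations

# Hypothetical network: 2 inputs, 2 hidden neurons, 1 output neuron.
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0], sigmoid),  # hidden layer
    ([[1.0, -1.0]], [0.0], sigmoid),                    # output layer
]
prediction = forward([1.0, 1.0], layers)
```

Because the output neuron uses a sigmoid, `prediction` is a one-element list holding a value strictly between 0 and 1. During training, that value would be compared with the ground truth label by a loss function.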

Use Cases and Applications

FFNNs find extensive use in various domains, including image classification, speech recognition, and natural language processing. In image classification tasks, FFNNs analyze pixel values of input images and classify them into predefined categories. Speech recognition systems utilize FFNNs to process audio signals and convert them into textual representations. FFNNs also excel in pattern recognition and data classification tasks, where they analyze complex data patterns to make accurate predictions or classifications.

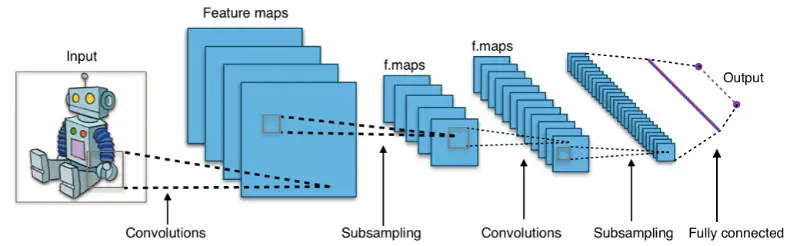

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a major advance in artificial neural networks, tailored to tasks involving image data analysis and processing. Their specialized architecture has transformed computer vision and image processing, enabling machines to interpret visual information with high accuracy.

CNNs are a class of deep learning models specifically designed to process structured grid data, such as images, by leveraging the principles of convolution and hierarchical feature extraction. Unlike fully connected feed-forward networks, which treat the input as a flat vector, CNNs preserve the spatial structure of input data and exploit local correlations present in images.

Architecture of CNNs

- Convolutional Layers: The core building blocks of CNNs, convolutional layers consist of learnable filters that slide across the input data, extracting features through convolution operations. Each filter detects specific patterns, such as edges or textures, contributing to the creation of feature maps.

- Pooling Layers: Following convolutional layers, pooling layers downsample the feature maps, reducing their spatial dimensions while retaining the most salient information. Common pooling operations include max pooling and average pooling.
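
The two layer types above can be illustrated with a small sketch in plain Python. It assumes a single-channel image, no padding, a stride of 1, and a hand-written filter rather than a learned one. As in most deep learning libraries, the "convolution" here is technically a cross-correlation, since the filter is not flipped.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a window are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]

# Toy 5x5 image: dark on the left (0), bright on the right (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical hand-written filter that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)  # 4x4: every row is [0, 2, 0, 0]
pooled = max_pool2d(feature_map)     # 2x2: [[2, 0], [2, 0]]
```

The feature map is non-zero only where the edge sits, and pooling halves each spatial dimension while keeping that response.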

Applications in Computer Vision and Image Processing

CNNs have revolutionized various computer vision tasks, including:

- Image Classification: CNNs analyze the content of input images and assign them to predefined categories or classes based on learned features, achieving state-of-the-art performance in tasks such as object recognition and scene understanding.

- Object Detection: In object detection tasks, CNNs not only classify objects but also localize them within images, enabling applications such as autonomous driving, surveillance, and robotics.

- Facial Recognition: Leveraging the hierarchical representations learned by CNNs, facial recognition systems accurately identify and authenticate individuals from facial images, with applications spanning security, authentication, and personalization.

Handling Image Data and Complex Patterns

CNNs excel at handling image data and discerning complex patterns within images by:

- Automatically learning hierarchical representations of visual features through the successive layers of the network.

- Exploiting local correlations and spatial information present in images, enabling robust feature extraction and pattern recognition.

- Adapting to diverse datasets and challenging environments, making them versatile tools for addressing a wide range of real-world applications, from medical imaging to satellite imagery analysis.

CNNs remain among the most widely used tools for image analysis within artificial neural networks. Their strength in extracting visual features continues to advance computer vision and drive innovation across many industries and domains.

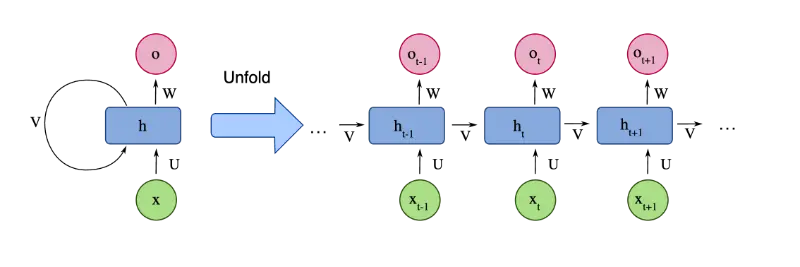

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are suited for processing sequential data by incorporating feedback loops and memory cells. With their ability to capture temporal dependencies and contextual information, RNNs have found widespread applications in diverse domains, ranging from natural language processing to time series analysis.

RNNs are a class of neural network architectures designed to operate on sequential data, where the output at each time step depends not only on the current input but also on the previous inputs through the hidden state. This recurrent connectivity enables RNNs to capture dependencies across time steps and dynamic patterns inherent in sequential data. Plain RNNs, however, struggle with very long-term dependencies, because gradients tend to vanish or explode during training.

Description of Feedback Loops and Memory Cells

- RNNs are characterized by feedback loops that allow information to persist over time, enabling the network to maintain an internal state or memory of past inputs.

- Gated memory cells, such as the Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), are the building blocks of many modern RNNs. Their gates control what information is stored, updated, and retrieved over multiple time steps.
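
The feedback loop can be made concrete with a sketch of a simple (Elman-style) recurrent step, in which the new hidden state depends on both the current input and the previous hidden state. The function names and weight values below are hypothetical, and gated cells such as LSTM and GRU add further machinery that this sketch omits.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for j in range(len(h_prev)):
        z = b_h[j]
        z += sum(w_xh[j][i] * x[i] for i in range(len(x)))            # current input
        z += sum(w_hh[j][k] * h_prev[k] for k in range(len(h_prev)))  # feedback loop
        h_new.append(math.tanh(z))
    return h_new

def run_rnn(sequence, w_xh, w_hh, b_h):
    """Run the same step (same weights) over every element of the sequence."""
    h = [0.0] * len(b_h)  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states

# Hypothetical weights: 1 input feature, 2 hidden units.
w_xh = [[1.0], [-1.0]]
w_hh = [[0.5, 0.0], [0.0, 0.5]]
b_h = [0.0, 0.0]

states_a = run_rnn([[1.0], [0.0]], w_xh, w_hh, b_h)
states_b = run_rnn([[0.0], [0.0]], w_xh, w_hh, b_h)
```

Both sequences end with the same input (zero), yet the final hidden states differ: `states_a` still carries a trace of the earlier input, while `states_b` stays at zero. That persistence is the "memory" the feedback loop provides.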

Applications in Time Series Data and Natural Language Processing

RNNs find extensive use in:

- Time Series Analysis: RNNs excel at modeling and forecasting sequential data, making them invaluable tools for tasks such as financial forecasting, weather prediction, and stock market analysis.

- Natural Language Processing: RNNs are widely employed in language modeling, text generation, sentiment analysis, and machine translation, leveraging their ability to capture semantic and syntactic structures in textual data.

RNNs also play a key role in sequence-to-sequence applications, including:

- Machine Translation: RNNs power machine translation systems by processing input sequences in one language and generating corresponding sequences in another language, enabling cross-lingual communication and localization.

- Speech Recognition: RNNs are instrumental in converting audio signals into textual representations, enabling accurate speech recognition and transcription in applications such as virtual assistants, dictation software, and voice-controlled devices.

RNNs excel at handling temporal sequences by:

- Updating their internal state based on the input at each time step while reusing the same weights, allowing them to process sequences of varying lengths and dynamics.

- Capturing contextual information across multiple time steps and, with gated variants such as LSTM and GRU, longer-range dependencies, facilitating tasks that require an understanding of sequential relationships and temporal dynamics.

RNNs are tailored to process sequential data and capture temporal dynamics. With their feedback loops and memory cells, RNNs continue to drive innovation in diverse fields, including time series analysis and natural language processing.

Deep Neural Networks (DNNs)

Deep Neural Networks (DNNs) use many stacked layers to uncover complex patterns and relationships within data. Their hierarchical architecture has advanced many domains, allowing them to tackle intricate tasks and handle vast amounts of data.

DNNs are artificial neural networks characterized by their depth, achieved by stacking multiple layers of neurons. Depth is a property rather than a separate architecture: feed-forward, convolutional, and recurrent networks can all be deep. A deep architecture allows a network to learn hierarchical representations of data, capturing intricate patterns and relationships that may elude shallow networks.

Deep Layers for Learning Complex Relationships

The architecture of DNNs consists of multiple hidden layers sandwiched between input and output layers, each layer progressively learning abstract features from the input data. This hierarchical representation allows DNNs to capture intricate relationships and dependencies within the data, facilitating more accurate predictions and classifications.

Applications in Complex Tasks

DNNs find widespread applications in tackling complex tasks, including:

- Medical Diagnosis: DNNs analyze medical imaging data to assist in diagnosing diseases such as cancer, Alzheimer’s, and heart conditions, offering insights that aid healthcare professionals in making informed decisions.

- Social Network Analysis: DNNs mine vast amounts of social media data to uncover patterns in user behavior, sentiment, and network structure, enabling businesses and organizations to better understand their audience and tailor their strategies accordingly.

Handling Large Amounts of Data and Real-world Applications

DNNs excel at processing large volumes of data and are instrumental in various real-world applications, including:

- Financial forecasting

- Autonomous vehicles

- Energy grid optimization

- Climate modeling

- Drug discovery

- Fraud detection

- Robotics

Modular and Specialized Neural Networks

Modular and specialized neural networks offer tailored solutions to specific problems and tasks. They use modular architectures and specialized design principles to perform well in diverse domains, from image generation to data synthesis.

Modular neural networks are characterized by their modular architecture, which comprises interconnected modules or sub-networks, each responsible for specific functionalities or tasks. These modules can be independently trained and optimized before being integrated into the larger network, allowing for greater flexibility and scalability in network design.

Description of Specialized Networks

Specialized networks, such as Radial Basis Function Networks (RBFNs) and Generative Adversarial Networks (GANs), are designed to excel in specific tasks:

- Radial Basis Function Network (RBFN): RBFNs are particularly well-suited for function approximation and regression tasks, leveraging radial basis functions to model complex relationships between input and output variables.

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, engaged in an adversarial training process: the generator produces candidate samples, and the discriminator tries to distinguish them from real data. GANs are known for their ability to generate realistic data samples, making them valuable tools for tasks such as image generation, data synthesis, and anomaly detection.
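
To illustrate the radial basis function network described above, here is a minimal sketch of its prediction step, assuming Gaussian basis functions with a shared width. The centers, width, and output weights are hypothetical values chosen by hand. In practice the centers are often chosen by clustering and the output weights are fitted to data.

```python
import math

def gaussian_rbf(x, center, width):
    """Gaussian radial basis function: 1 at the center, decaying with distance."""
    sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-sq_dist / (2.0 * width ** 2))

def rbfn_predict(x, centers, width, weights, bias=0.0):
    """Output is a weighted sum of the basis function activations."""
    activations = [gaussian_rbf(x, c, width) for c in centers]
    return sum(w * a for w, a in zip(weights, activations)) + bias

# Hypothetical 1-D network with two centers and opposite output weights.
centers = [[0.0], [1.0]]
weights = [1.0, -1.0]

at_first_center = rbfn_predict([0.0], centers, width=0.5, weights=weights)
midpoint = rbfn_predict([0.5], centers, width=0.5, weights=weights)
```

At the first center the first basis function dominates, so the output is positive. At the midpoint both basis functions respond equally, and the opposite weights cancel to zero.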

Use Cases and Applications

Modular and specialized neural networks find applications in various domains, including:

- Image Generation: GANs are widely used for generating realistic images, enabling applications in computer graphics, art generation, and virtual reality.

- Data Synthesis: Generative models such as GANs can synthesize data samples that closely resemble the distribution of real-world data, facilitating tasks such as data augmentation, anomaly detection, and simulation.

- Anomaly Detection: Specialized networks can detect anomalies in complex datasets, such as fraudulent transactions in financial data or anomalous patterns in sensor data, enabling proactive risk management and fraud prevention.

Tailoring Networks for Specific Problems and Tasks

By tailoring neural network architectures to suit specific problems and tasks, researchers and practitioners can achieve superior performance and efficiency compared to one-size-fits-all approaches. This customization involves:

- Selecting appropriate network architectures, activation functions, and optimization algorithms based on the nature of the problem and the characteristics of the data.

- Fine-tuning hyperparameters and adjusting network configurations to optimize performance metrics such as accuracy, precision, and recall.
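
The metrics named above can be computed directly from predictions. The sketch below assumes binary labels encoded as 0 and 1. The function name and the sample labels are made up for illustration.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Guard against division by zero when nothing is predicted or labeled positive.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }

# Hypothetical ground truth and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
```

Here the model makes one false positive and one false negative out of eight examples, so accuracy, precision, and recall all come out to 0.75. On imbalanced data the three values usually diverge, which is why they are tracked separately.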

Training and Learning Process

Neural networks undergo a dynamic learning process to acquire the ability to perform complex tasks and make accurate predictions from data. This process involves several key steps, including data ingestion, parameter optimization, and iterative refinement, guided by the expertise of data scientists.

Overview of the Learning Process in Neural Networks

The learning process in neural networks involves presenting the network with training data and adjusting its parameters iteratively to minimize a defined error metric, typically referred to as the loss function. Through this iterative optimization process, the network learns to map input data to output predictions, gradually improving its performance over time.

Training Data and Loss Functions

Training data comprises a set of input-output pairs used to train the neural network. The network learns from these examples by comparing its predictions to the ground truth outputs and adjusting its parameters to minimize the discrepancy, as measured by the loss function. Common loss functions include mean squared error (MSE) for regression tasks and categorical cross-entropy for classification tasks.
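
As a rough sketch, the two loss functions mentioned above can be written as follows. The cross-entropy version assumes a single example with a one-hot target and predicted class probabilities. The small `eps` guard against `log(0)` is an implementation choice for this example.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy for one example: y_true is one-hot, y_pred holds class probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

# Regression: only the third prediction is off (by 2), so MSE = 4 / 3.
mse = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])

# Classification: the true class is index 1 in both cases.
ce_confident = categorical_cross_entropy([0, 1, 0], [0.1, 0.8, 0.1])
ce_unsure = categorical_cross_entropy([0, 1, 0], [0.4, 0.2, 0.4])
```

The cross-entropy is lower when the model assigns a high probability to the correct class (`ce_confident`) than when it spreads probability over the wrong classes (`ce_unsure`).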

Backpropagation Algorithm

Backpropagation is a fundamental algorithm used to train neural networks. It applies the chain rule to compute the gradients of the loss function with respect to the network’s parameters, propagating error gradients backward through the network from the output layer to the input layer. These gradients indicate the direction and magnitude of the parameter updates needed to reduce the loss. An optimization algorithm such as gradient descent then uses them to adjust the parameters.
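
A tiny worked example may help. The sketch below assumes a network with one input, one sigmoid hidden neuron, and one linear output, trained with a squared-error loss. The parameter values are arbitrary, and a finite-difference check is included only to confirm that the chain-rule gradients are right.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(params, x, y):
    """Squared error of a 1-1-1 network: y_hat = w2 * sigmoid(w1 * x + b1) + b2."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y_hat = w2 * h + b2
    return 0.5 * (y_hat - y) ** 2

def backprop(params, x, y):
    """Gradients of the loss with respect to (w1, b1, w2, b2), via the chain rule."""
    w1, b1, w2, b2 = params
    # Forward pass, keeping the intermediate values.
    h = sigmoid(w1 * x + b1)
    y_hat = w2 * h + b2
    # Backward pass, from the output layer toward the input layer.
    d_y_hat = y_hat - y        # dL/dy_hat
    d_w2 = d_y_hat * h
    d_b2 = d_y_hat
    d_h = d_y_hat * w2
    d_z = d_h * h * (1.0 - h)  # derivative of sigmoid is h * (1 - h)
    d_w1 = d_z * x
    d_b1 = d_z
    return [d_w1, d_b1, d_w2, d_b2]

def numerical_gradient(params, x, y, eps=1e-6):
    """Central finite differences, used here only as a sanity check."""
    grads = []
    for i in range(len(params)):
        plus = list(params)
        minus = list(params)
        plus[i] += eps
        minus[i] -= eps
        grads.append((loss(plus, x, y) - loss(minus, x, y)) / (2 * eps))
    return grads

params = [0.3, -0.1, 0.8, 0.2]  # hypothetical starting parameters
analytic = backprop(params, x=1.5, y=1.0)
numeric = numerical_gradient(params, x=1.5, y=1.0)
```

The two gradient lists agree to within numerical error. Real frameworks perform the same backward pass automatically for networks with many layers and parameters.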

Optimizing Network Parameters: Weights and Biases

During training, the network’s parameters, including weights and biases, are optimized to minimize the loss function. Optimization algorithms, such as stochastic gradient descent (SGD) or Adam, iteratively adjust these parameters based on the computed gradients, gradually converging towards optimal values that minimize prediction errors.
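
The update loop can be sketched with stochastic gradient descent on the simplest possible model, a single linear neuron. The data, learning rate, and epoch count below are illustrative assumptions. Adam and other optimizers follow the same pattern but adapt the step size per parameter.

```python
import random

def sgd_linear_fit(data, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w * x + b with per-example (stochastic) gradient descent."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    w, b = 0.0, 0.0            # initial weight and bias
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)   # visit the examples in a new order each epoch
        for x, y in samples:
            error = (w * x + b) - y
            # Gradients of 0.5 * error**2 with respect to w and b.
            grad_w = error * x
            grad_b = error
            # Step against the gradient.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b

# Hypothetical noise-free data generated from y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
w, b = sgd_linear_fit(data)
```

On this toy data the learned `w` and `b` end up very close to 2 and 1, the values used to generate it. With noisy data or a larger learning rate, the parameters would instead fluctuate around the optimum.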

Conclusion

The diverse neural network models showcased in this article underscore the transformative power of artificial intelligence and machine learning. From foundational feed-forward networks, also known as multilayer perceptrons, to convolutional and recurrent neural networks, each model is a testament to human ingenuity and technological advancement. As researchers continue to push the boundaries of the field, addressing challenges such as scalability and exploring new architectures and algorithms, the potential for innovation remains large. With dedicated researchers and pioneers like Yann LeCun leading the charge, the future of neural networks holds promise for solving complex problems, driving progress, and shaping the landscape of artificial intelligence for years to come.