As artificial intelligence rapidly evolves, one of the most groundbreaking advancements is the emergence of Agentic AI systems. Unlike traditional AI models that are task-specific and reactive, Agentic AI is autonomous, goal-directed, and capable of initiating action based on context. To support such capabilities, a robust and modular Agentic AI systems architecture is essential.

In this blog post, we’ll explore what Agentic AI is, break down the key components of its architecture, and analyze how to design scalable, intelligent systems that mimic human-like agency. Whether you’re a machine learning engineer or a curious tech enthusiast, this guide offers a deep dive into the design blueprints of next-generation AI.

What Is Agentic AI?

Agentic AI refers to AI systems that act like intelligent agents. These agents aren’t just passive models that respond to inputs; they:

- Set goals

- Make decisions proactively

- Reflect on outcomes

- Learn from past actions

- Adapt to changing environments

In essence, they function like autonomous entities that navigate a problem space, adjusting plans and behaviors as needed.

This is fundamentally different from traditional predictive models and from generative AI used in a simple prompt-and-response pattern, both of which typically wait for an external prompt or stay confined to a narrow context.

Why Agentic AI Needs Specialized Architecture

A reactive chatbot can run on a basic LLM backend. But an agentic system — say, a financial advisor that monitors market trends, makes portfolio decisions, and learns from user feedback — requires a multi-layered, modular architecture. The system needs memory, planning capability, tool usage, and autonomy, often across real-time and asynchronous modes.

Without a well-thought-out architecture, such systems become brittle, unscalable, or unsafe.

Core Principles of Agentic AI Architecture

Before diving into the specific components, it’s important to establish some architectural principles unique to agentic systems:

- Autonomy: The system should decide on tasks without human prompts.

- Reactivity + Proactivity: The agent must respond to inputs but also initiate actions.

- Modularity: Different functions (e.g., memory, planning, execution) should be decoupled and extensible.

- Reflection: Agents should evaluate outcomes and adjust behavior.

- Tool Use: Integration with external APIs, databases, or search engines is often critical.

With those principles in mind, let’s now examine the core components.

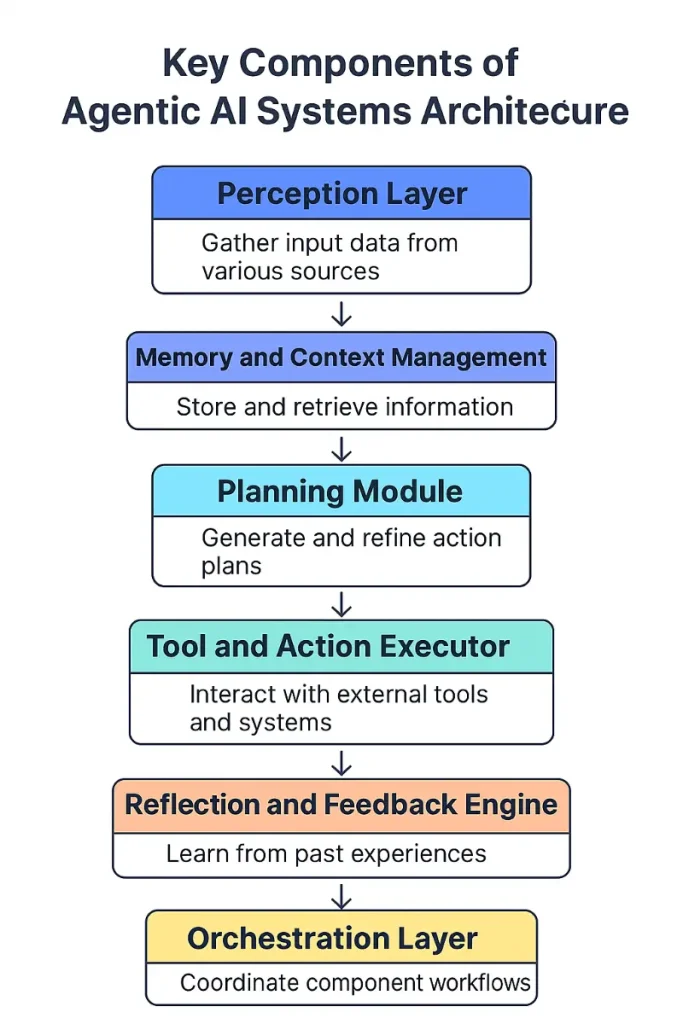

Key Components of Agentic AI Systems Architecture

Designing a robust Agentic AI system goes far beyond just plugging in a large language model (LLM). Agentic systems must perceive their environment, store and retrieve context, plan multi-step tasks, take actions, and learn from outcomes — all autonomously. This demands a layered architecture where each component plays a critical role in creating intelligent, adaptive behavior.
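
The perceive, plan, act, and learn cycle described above can be sketched as a small control loop. This is a minimal illustration, not a reference design: `AgentState`, `run_agent`, and the four callables are hypothetical names introduced here, and the toy functions at the bottom stand in for real perception, planning, and tool calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    """State carried between iterations (hypothetical structure)."""
    goal: str
    memory: list[str] = field(default_factory=list)
    done: bool = False


def run_agent(
    state: AgentState,
    perceive: Callable[[AgentState], str],
    plan: Callable[[AgentState, str], str],
    act: Callable[[str], str],
    reflect: Callable[[AgentState, str], bool],
    max_steps: int = 5,
) -> AgentState:
    """Run perceive -> plan -> act -> reflect until done or out of steps."""
    for _ in range(max_steps):
        observation = perceive(state)
        action = plan(state, observation)
        result = act(action)
        state.memory.append(f"{action} -> {result}")  # store context
        state.done = reflect(state, result)           # evaluate the outcome
        if state.done:
            break
    return state


# Toy stand-ins (assumptions for illustration): count to three, then stop.
counter = {"n": 0}


def perceive(state: AgentState) -> str:
    return f"count={counter['n']}"


def plan(state: AgentState, observation: str) -> str:
    return "increment"


def act(action: str) -> str:
    counter["n"] += 1
    return str(counter["n"])


def reflect(state: AgentState, result: str) -> bool:
    return int(result) >= 3


final_state = run_agent(AgentState(goal="reach 3"), perceive, plan, act, reflect)
```

The `max_steps` cap is a deliberate choice: even a toy loop should have a hard stop so a faulty `reflect` function cannot keep the agent running indefinitely.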

Let’s dive deeper into the essential components that form the backbone of Agentic AI systems:

1. Perception Layer

The perception layer is the agent’s window to the world. It’s responsible for gathering and interpreting inputs — be it text, images, sensor data, API responses, or user commands. In many LLM-based systems, this primarily involves natural language inputs via chat interfaces or API payloads. However, agentic systems often consume a richer set of inputs:

- Text and voice commands from users

- Structured data such as database entries or tabular files

- Web scraping results for real-time updates

- Sensor inputs in IoT or robotics-based agents

- Multimodal inputs, including images or video feeds

Once data is ingested, it goes through preprocessing steps like language parsing, sentiment analysis, object detection (for visual inputs), or vector embedding generation. This layer ensures the agent receives meaningful, normalized inputs that downstream components can process effectively.
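
As a rough sketch of what "normalized inputs" could mean in practice, the snippet below maps a few raw input types onto one common record. The `Observation` schema, the `normalize` function, and the modality labels are assumptions made for this example; a real perception layer would add steps such as embedding generation or object detection.

```python
import json
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Observation:
    """Normalized input handed to downstream components (hypothetical schema)."""
    source: str    # e.g. "chat", "api", "sensor"
    modality: str  # "text", "structured", or "numeric"
    content: Any


def normalize(source: str, raw: Any) -> Observation:
    """Map a raw input to an Observation based on its Python type."""
    if isinstance(raw, bytes):
        raw = raw.decode("utf-8", errors="replace")
    if isinstance(raw, str):
        try:
            parsed = json.loads(raw.strip())
        except json.JSONDecodeError:
            parsed = None
        if isinstance(parsed, (dict, list)):
            return Observation(source, "structured", parsed)
        # Plain text: collapse runs of whitespace.
        return Observation(source, "text", " ".join(raw.split()))
    if isinstance(raw, (int, float)):
        return Observation(source, "numeric", float(raw))
    if isinstance(raw, (dict, list)):
        return Observation(source, "structured", raw)
    raise TypeError(f"unsupported input type: {type(raw).__name__}")


chat = normalize("chat", "  hello   world ")
payload = normalize("api", '{"price": 10}')
reading = normalize("sensor", 21)
```

Downstream components then only need to handle `Observation` objects rather than every raw input format.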

2. Memory and Context Management

Unlike reactive AI models, agentic systems must remember past interactions, learn from them, and adapt behavior. Memory isn’t optional — it’s foundational.

There are generally three types of memory in agentic systems:

- Short-term (working) memory: Used during the current session or task. Think of it as a scratchpad where intermediate reasoning steps, hypotheses, and partial conclusions are stored.

- Long-term memory: Stores knowledge across sessions, allowing the agent to recall previous outcomes, user preferences, goals, and context. It is typically implemented with a vector store, either a similarity-search library such as FAISS or a vector database such as Pinecone or Weaviate, which supports semantic search over past events or documents.

- Structured memory: Some data, such as metadata, logs, or state checkpoints, may be stored in structured formats like relational databases or JSON objects.

This layered memory structure ensures continuity, allowing the agent to evolve and refine its performance over time.
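
The three memory types can be illustrated with a toy, in-process version. Everything here is an assumption made for the sketch: `AgentMemory` is a hypothetical class, and the bag-of-words `embed` function is a stand-in for a real embedding model and vector store.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    """Toy embedding: lowercase bag-of-words counts (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AgentMemory:
    def __init__(self, working_size: int = 3):
        self.working: deque[str] = deque(maxlen=working_size)  # short-term scratchpad
        self.long_term: list[tuple[str, Counter]] = []         # (text, embedding)
        self.structured: dict[str, object] = {}                # metadata, checkpoints

    def remember(self, text: str) -> None:
        self.working.append(text)  # oldest entry drops out when full
        self.long_term.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = AgentMemory(working_size=2)
memory.remember("user prefers low risk bonds")
memory.remember("market closed early on friday")
memory.remember("user asked about tech stocks")
memory.structured["last_checkpoint"] = "step-3"
best_match = memory.recall("what risk does the user prefer")
```

Working memory here is bounded and forgets old entries, while long-term memory keeps everything and is queried by similarity rather than by recency.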

3. Planning Module

The planning module is where an agent’s intelligence begins to shine. It’s responsible for breaking down high-level goals into smaller sub-goals and tasks — often over multiple steps or iterations. The agent needs to decide:

- What tasks must be done first?

- Which tools or resources are required?

- What does success look like?

- How should the plan be adjusted if a step fails?

Planning can be implemented in several ways:

- Prompt chaining: Using frameworks like LangChain or LlamaIndex, agents can sequence prompt-based reasoning steps.

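- Tree-of-Thought (ToT) and ReAct: Tree-of-Thought structures reasoning into branches, letting the agent explore several candidate paths and evaluate them before committing to one. ReAct instead interleaves reasoning steps with actions and observations, so each step is informed by the result of the previous one.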

- Finite State Machines or PDDL-based planners: In more structured environments (e.g., robotics), planners may use formal rules to transition between states.

This module makes the agent proactive rather than reactive — enabling it to initiate tasks without user input.
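
One simple way to represent a decomposed goal is a set of tasks with dependencies, ordered so that prerequisites run first. The sketch below assumes the decomposition already exists (in practice an LLM or a formal planner would produce it) and only shows the ordering step, using the standard-library `graphlib` module. `Task` and `order_tasks` are hypothetical names.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Task:
    name: str
    tool: str  # which tool or resource the task needs
    depends_on: list[str] = field(default_factory=list)


def order_tasks(tasks: list[Task]) -> list[str]:
    """Return task names in an order that respects every dependency."""
    known = {t.name for t in tasks}
    for t in tasks:
        missing = set(t.depends_on) - known
        if missing:
            raise ValueError(f"{t.name} depends on unknown tasks: {sorted(missing)}")
    graph = {t.name: set(t.depends_on) for t in tasks}
    # static_order() raises graphlib.CycleError if the plan is circular.
    return list(TopologicalSorter(graph).static_order())


# Hypothetical plan for a "daily market brief" goal.
plan = [
    Task("report", tool="email", depends_on=["analyze"]),
    Task("analyze", tool="llm", depends_on=["fetch_prices", "fetch_news"]),
    Task("fetch_prices", tool="market_api"),
    Task("fetch_news", tool="web_search"),
]
order = order_tasks(plan)
```

The two fetch tasks have no dependency on each other, so their relative order is not significant. An orchestrator could run them in parallel.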

4. Tool and Action Executor

Once a plan is formed, the agent must act on it. That’s the role of the tool or action executor — a critical bridge between internal reasoning and the external world. This component lets the agent interact with:

- APIs: Call weather services, financial data providers, or any external source

- Databases: Run SQL queries, retrieve rows, or update records

- File systems: Read or write local or cloud-stored files

- Applications: Send emails, control Slack bots, interact with CRMs, or run code

- Web interfaces: Use browser automation to perform searches or scrape data

The executor module must include logging, retries, and validation to ensure robust operations. It should also include safeguards to prevent agents from overstepping permissions.
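
A minimal sketch of such an executor might combine a permission allowlist, retries, result validation, and logging. `ToolExecutor` and the example tools are hypothetical. A production version would also need timeouts and argument validation.

```python
import logging
import time
from typing import Any, Callable

logger = logging.getLogger("executor")


class ToolExecutor:
    def __init__(self, allowed: set[str], max_retries: int = 2, backoff_s: float = 0.0):
        self.allowed = allowed          # tools this agent may call
        self.max_retries = max_retries  # retries after the first attempt
        self.backoff_s = backoff_s
        self.tools: dict[str, Callable[..., Any]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self.tools[name] = fn

    def run(self, name: str, validate: Callable[[Any], bool] = lambda r: True, **kwargs: Any) -> Any:
        if name not in self.allowed:
            raise PermissionError(f"tool {name!r} is not permitted")
        if name not in self.tools:
            raise KeyError(f"tool {name!r} is not registered")
        last_error: Exception | None = None
        for attempt in range(1, self.max_retries + 2):
            try:
                result = self.tools[name](**kwargs)
                if not validate(result):
                    raise ValueError(f"invalid result from {name!r}: {result!r}")
                logger.info("tool=%s attempt=%d ok", name, attempt)
                return result
            except Exception as exc:
                last_error = exc
                logger.warning("tool=%s attempt=%d failed: %s", name, attempt, exc)
                time.sleep(self.backoff_s * attempt)  # linear backoff
        raise RuntimeError(f"tool {name!r} failed after {self.max_retries + 1} attempts") from last_error


# Hypothetical tool that fails once, then succeeds.
calls = {"n": 0}


def flaky_quote(symbol: str) -> float:
    calls["n"] += 1
    if calls["n"] == 1:
        raise ConnectionError("temporary network error")
    return 101.5


executor = ToolExecutor(allowed={"quote"})
executor.register("quote", flaky_quote)
executor.register("delete_records", lambda: "deleted")  # registered but not allowed
price = executor.run("quote", validate=lambda r: r > 0, symbol="ACME")
```

Calling `executor.run("delete_records")` raises `PermissionError` before the tool is ever invoked, which is the point of checking the allowlist first.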

5. Feedback and Reflection Engine

No intelligent system is complete without self-evaluation. The reflection engine enables agents to:

- Review their actions and outputs

- Determine if objectives were met

- Adjust future decisions based on feedback

- Detect and avoid failure patterns

This may involve chain-of-thought self-assessment, user feedback ingestion, or reward modeling. In LLM-based agents, reflection is often achieved via specialized prompts that ask the model to critique or verify its own outputs. More advanced systems simulate counterfactuals — asking “What could I have done differently?” — to improve future decisions.

Reflection is what allows Agentic AI to learn and grow autonomously, making it fundamentally different from hardcoded logic or stateless AI services.
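
The critique-and-revise pattern mentioned above can be sketched as a bounded loop. The `generate` and `critique` callables below are deterministic stand-ins for model calls, and all names are assumptions made for illustration.

```python
from typing import Callable, Optional


def reflect_and_revise(
    task: str,
    generate: Callable[[str, Optional[str]], str],
    critique: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> tuple[str, list[str]]:
    """Return the final draft and the critiques collected along the way."""
    feedback: Optional[str] = None
    history: list[str] = []
    draft = ""
    for _ in range(max_rounds):
        draft = generate(task, feedback)  # revise using the last critique, if any
        feedback = critique(task, draft)
        if feedback is None:              # the critic has no objection
            break
        history.append(feedback)
    return draft, history


# Deterministic stand-ins for what would be LLM calls in a real agent.
def generate(task: str, feedback: Optional[str]) -> str:
    draft = "Buy ACME."
    if feedback:
        draft += " Risk: high volatility."
    return draft


def critique(task: str, draft: str) -> Optional[str]:
    return None if "Risk:" in draft else "Mention the risk level."


final_draft, critiques = reflect_and_revise("recommend a trade", generate, critique)
```

Keeping the critiques in `history` gives the agent something to write into long-term memory, so the same mistake can be avoided on later tasks.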

6. Orchestration Layer

Orchestration is the glue that binds all other components. This layer handles:

- Task scheduling: Especially important in multi-agent setups or long-running tasks

- Workflow management: Ensuring each module executes in the correct sequence

- Concurrency handling: When multiple agents or processes are running

- Context propagation: Passing information between modules while maintaining logical state

Popular orchestration frameworks include LangGraph, AutoGen, CrewAI, and traditional DAG managers like Airflow or Prefect (used when agents are part of larger pipelines).

In an enterprise context, the orchestration layer often interfaces with CI/CD systems, audit logs, and observability stacks to ensure transparency and control.
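
To make workflow ordering, concurrency, and context propagation concrete, here is a small sketch built on `asyncio`. It is not how LangGraph, AutoGen, or Airflow work internally. `run_workflow` and the step functions are hypothetical, and the stage layout is an assumption chosen for the example.

```python
import asyncio
from typing import Awaitable, Callable

Context = dict[str, object]
Step = Callable[[Context], Awaitable[Context]]


async def run_workflow(stages: list[list[Step]], context: Context) -> Context:
    """Run stages in order; steps inside one stage run concurrently."""
    for stage in stages:
        # Each step gets its own copy so concurrent steps cannot interfere.
        results = await asyncio.gather(*(step(dict(context)) for step in stage))
        for update in results:
            context.update(update)  # merge each step's output into shared state
    return context


# Hypothetical steps returning canned data.
async def fetch_prices(ctx: Context) -> Context:
    return {"prices": [100, 102]}


async def fetch_news(ctx: Context) -> Context:
    return {"headlines": 2}


async def summarize(ctx: Context) -> Context:
    return {"summary": f"{len(ctx['prices'])} prices, {ctx['headlines']} headlines"}


result = asyncio.run(
    run_workflow([[fetch_prices, fetch_news], [summarize]], {"goal": "daily brief"})
)
```

The second stage only starts after both fetch steps finish, so `summarize` can rely on their outputs being present in the context.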

Technologies Used in Agentic Architectures

Here’s a non-exhaustive list of popular tools and frameworks:

- LLM backbones: GPT-4, Claude, LLaMA, Mistral

- Planning & chaining: LangChain, CrewAI, LangGraph, MetaGPT

- Memory: FAISS, Weaviate, Pinecone, Redis

- Execution: Python SDKs, REST APIs, Selenium, OpenAI function calling

- Reasoning and reflection patterns: ReAct, Self-Ask, Tree-of-Thought

- Orchestration: LangGraph, AutoGen, Airflow, Prefect, Dagster, or custom orchestrators

Cloud services like Amazon Bedrock, Azure OpenAI Service, and Google Vertex AI also offer managed model hosting and deployment options that scale.

Design Considerations and Best Practices

- Security: Because agentic systems can call tools, restrict each agent to the minimum permissions it needs and validate every action before it runs.

- Explainability: Use logging and trace visualization to understand agent decisions.

- Fail-safes: Implement timeouts, error handlers, and guardrails to prevent runaway behavior.

- Human-in-the-loop: For high-stakes tasks (e.g., finance, healthcare), always include a checkpoint for human approval.
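
Several of these practices can be combined in a single gate in front of the executor. The sketch below is one possible shape, under the assumption that actions carry a monetary amount. `Action`, `guarded_execute`, and the threshold value are hypothetical, and the `approve` callable stands in for a real human review step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    amount: float = 0.0


def guarded_execute(
    action: Action,
    execute: Callable[[Action], str],
    approve: Callable[[Action], bool],
    allowed: set[str],
    approval_threshold: float,
    audit_log: list[str],
) -> str:
    """Apply scope limits and a human checkpoint, logging every decision."""
    if action.name not in allowed:  # security: limit action scope
        audit_log.append(f"blocked:{action.name}")
        return "blocked"
    if action.amount >= approval_threshold and not approve(action):
        audit_log.append(f"rejected:{action.name}")  # human-in-the-loop said no
        return "rejected"
    result = execute(action)
    audit_log.append(f"executed:{action.name}")  # explainability: audit trail
    return result


audit: list[str] = []
policy = dict(
    execute=lambda a: f"placed {a.name} for {a.amount}",
    approve=lambda a: False,  # stand-in reviewer that denies everything
    allowed={"buy", "sell"},
    approval_threshold=10_000.0,
    audit_log=audit,
)

small = guarded_execute(Action("buy", 500.0), **policy)
large = guarded_execute(Action("sell", 50_000.0), **policy)
wire = guarded_execute(Action("wire_transfer", 10.0), **policy)
```

Small trades pass through, large ones wait on the reviewer, and anything outside the allowlist is refused outright. All three outcomes end up in the audit log.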

Future of Agentic AI Architectures

As agentic AI matures, architectures will evolve to include:

- Multi-agent collaboration: Agents that specialize in tasks and coordinate as a team.

- Emotion-aware systems: Especially in health and education contexts.

- Ethical reasoning engines: To ensure actions align with values and policies.

- Autonomous learning agents: Systems that rewrite their own prompts or goals over time.

Final Thoughts

Building agentic AI systems is not just about chaining prompts. It requires a thoughtful, modular architecture that balances autonomy with control. The Agentic AI systems architecture must enable perception, planning, execution, reflection, and orchestration — forming a true loop of intelligent behavior.

If you’re designing next-gen AI products or enterprise automations, understanding and implementing agentic architecture is your competitive edge.